Oculus CTO John Carmack has developed a new approach to VR video playback which makes it possible to play 5K 3D 360 video on headsets like Oculus Go and Gear VR. The results, as shown in the recent re-release of Henry, are very impressive. In a new blog post, Carmack breaks down how the player works and offers up a sample for developers interested in using the technique.

If you download the latest version of Henry on Go or Gear VR, you’ll see a scene that looks nearly as sharp as real-time rendering but carries far more detail than a mobile VR headset could render on its own, because you’re actually looking at pre-rendered footage. Not only is the detail impressive, but the 60 FPS playback makes motion look smooth, and it’s 3D to boot.

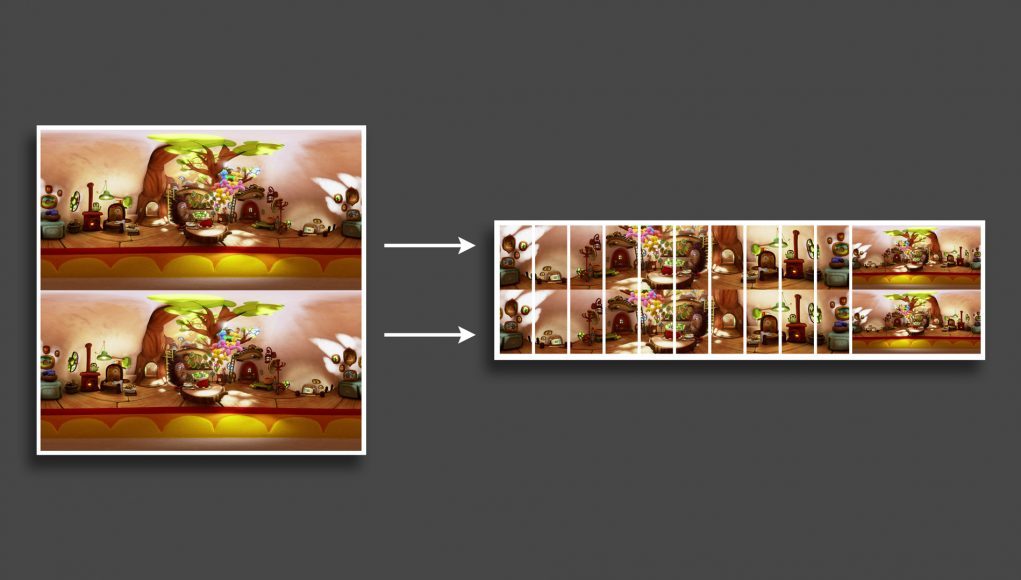

This new quality bar comes from a novel approach to 360 video playback that aims for maximum fidelity with minimal sacrifice elsewhere. Developed by Oculus CTO John Carmack, the player eschews common projections like equirectangular in favor of a scheme that simultaneously decodes four video streams: a ‘base layer’ and three high-resolution strips that shift as the viewer looks around the scene. Carmack explains in a post on the Oculus blog:

As a base layer, the entire 5k master is resampled to a 2k x 2k version that is always available, so even if you spin around 180 degrees in a split second, there will always be something sensible to see. If you look at the ceiling or floor, this version is all that you will see.

The key center regions are cropped directly out of the 5k x 5k master, pixel for pixel with no resampling. These are split into ten independent video files, a 512 x 1024 pixel column from each eye, stacked and transposed to make 2048 x 512 video strips.

The total file size is roughly what a traditional 5k x 5k encoding would be because skipping the poles balances out the extra gop keyframes and extra low detail copy of the middle.
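To make the strip geometry concrete, here is a minimal Python sketch of the crop arithmetic. It is not code from the VR 5K Player; several details are assumptions: "5k" is taken as 5120 px and "2k" as 2048 px, the master is assumed to be over-under stereo (left eye on top, right eye below), and the 1024-row band is assumed to be centered on each eye's horizon. The helper names (`strip_crops`, `stack_and_transpose`, `pixel_budget`) are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical dimensions: "5k" is taken as 5120 px and "2k" as 2048 px.
MASTER_W = 5120
MASTER_H = 5120
EYE_H = MASTER_H // 2               # assumed over-under stereo: left eye on top
COL_W, COL_H = 512, 1024            # per-eye column size quoted in the post
NUM_STRIPS = MASTER_W // COL_W      # 10, matching the ten strip videos
BASE_SIZE = 2048                    # assumed base-layer edge length


@dataclass(frozen=True)
class Rect:
    x: int
    y: int
    w: int
    h: int


def strip_crops(i: int) -> tuple[Rect, Rect]:
    """Left- and right-eye crop rectangles (in master pixels) for strip i.

    Assumes the 1024-row band is vertically centered on each eye's horizon.
    Crops are pixel for pixel: no resampling happens here.
    """
    if not 0 <= i < NUM_STRIPS:
        raise ValueError(f"strip index {i} out of range")
    x = i * COL_W
    top = (EYE_H - COL_H) // 2      # 768 rows skipped above the band in each eye
    return Rect(x, top, COL_W, COL_H), Rect(x, EYE_H + top, COL_W, COL_H)


def stack_and_transpose(left: list[list[int]], right: list[list[int]]) -> list[list[int]]:
    """Stack two per-eye columns (lists of pixel rows) and transpose the result.

    Two 1024-row x 512-column crops stack into 2048 rows x 512 columns;
    transposing yields 512 rows x 2048 columns, i.e. a 2048 x 512 frame.
    """
    stacked = left + right
    return [list(col) for col in zip(*stacked)]


def pixel_budget() -> tuple[int, int, int]:
    """Raw pixel counts per frame: (all strips, base layer, full master)."""
    strips = NUM_STRIPS * 2 * COL_W * COL_H
    base = BASE_SIZE * BASE_SIZE
    return strips, base, MASTER_W * MASTER_H
```

Under these assumptions, `pixel_budget()` returns `(10485760, 4194304, 26214400)`: the strips plus the base layer hold 56% of the master's raw pixels, and the band spans roughly ±36° of latitude. Raw pixel counts are not bitrates, though; Carmack's point is about encoded size, where the savings from skipping the poles are spent on extra keyframes and the duplicated low-detail copy.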

He notes that this approach effectively serves up a central band of high-resolution footage in a circle around the user but sacrifices quality toward the top and bottom of the image, which makes it less suitable for productions that make heavy use of that space. Carmack explains why he chose this particular sacrifice over alternatives like reducing the frame rate, going from 360 to 180 degrees, or dropping 3D:

The key compromise in the current scheme is to accept lower resolution at the top and bottom of the sphere. I justify this in several ways:

- Most people don’t look very far up or down when watching 360 video. Of course, you can follow this a little farther and say they don’t look left and right all that much either, and you wind up with the strong argument for 180 video, but 360 is still an incremental value.

- The down direction is often blacked out to hide the camera rig.

- For 3D 360 videos, directors are strongly urged to keep people from looking up or down, because the stereo 3D effect has to be ramped down before you can see the poles and have your eyes try to go backward.

- The uneven equirect projection at the poles can still give you some “extra” resolution, at least in one axis, so the bug becomes sort of a feature.
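The "extra resolution in one axis" in the last point follows from the standard equirectangular mapping. Every row has the same number of pixels spanning 360° of longitude, but the circle at latitude φ is only cos φ as long as the equator, so horizontal samples per degree of actual arc grow as 1/cos φ while vertical density stays constant. A small Python sketch of that relationship (the function names are illustrative, not from Carmack's post):

```python
import math


def equirect_horizontal_ppd(width_px: int, latitude_deg: float) -> float:
    """Horizontal pixels per degree of actual arc at a given latitude.

    Each equirect row spreads width_px samples over 360 degrees of longitude,
    but that row's circle shrinks by cos(latitude), so density rises toward the poles.
    """
    if abs(latitude_deg) >= 90:
        raise ValueError("latitude must be strictly between -90 and 90")
    return width_px / 360.0 / math.cos(math.radians(latitude_deg))


def equirect_vertical_ppd(height_px: int) -> float:
    """Vertical pixels per degree: rows are evenly spaced over 180 degrees of latitude."""
    return height_px / 180.0
```

For example, a 2048-pixel-wide image gives about 5.7 px/deg horizontally at the horizon and about 11.4 px/deg at 60° latitude, while its vertical density is the same everywhere.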

Still, it is a compromise, and some videos won’t work well in this format. An obvious example would be putting crisp text on the ceiling or floor. In exchange, we get a situation where view dependency in just the yaw angle is sufficient, which allows a system with minimal data duplication, so it can work on local devices instead of just massive servers.
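Because view dependency is limited to yaw, choosing which high-resolution strips to decode reduces to a one-dimensional lookup. The Python sketch below is a hypothetical selection rule, not the VR 5K Player's actual logic. It assumes ten strips of equal 36° width, strip 0 starting at yaw 0, and indices increasing with yaw, and it picks the strip under the view direction plus its neighbors, wrapping around at 360°.

```python
NUM_STRIPS = 10                      # from the post: ten strip videos
STRIP_SPAN_DEG = 360.0 / NUM_STRIPS  # 36 degrees of yaw per strip (assumed uniform)


def strips_to_decode(yaw_deg: float, count: int = 3) -> list[int]:
    """Return `count` strip indices centered on the strip under the view direction.

    Assumes strip 0 starts at yaw 0 and indices increase with yaw; wraps at 360.
    """
    if count < 1 or count > NUM_STRIPS or count % 2 == 0:
        raise ValueError("count must be an odd number between 1 and NUM_STRIPS")
    center = int((yaw_deg % 360.0) // STRIP_SPAN_DEG) % NUM_STRIPS
    half = count // 2
    return [(center + k) % NUM_STRIPS for k in range(-half, half + 1)]
```

With the defaults, looking at yaw 0° selects strips `[9, 0, 1]`, and yaw 100° selects `[1, 2, 3]`. A real player would presumably also need hysteresis and would have to wait for a keyframe in a newly selected strip, since each strip is an independent video. That delay is one reason the always-available base layer matters.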

“I am very excited at the prospect of unlocking quality that has laid dormant in so many high-end 360 productions made over the last several years that have only been seen at terribly degraded quality,” Carmack concludes. “Dust off those old studio masters!”

For developers who want to experiment with the new playback tech, Oculus has published a sample called the VR 5K Player, which includes the player itself and shows how to build video files that work with it.