Oculus Research, the VR and AR R&D division of Oculus, today announced a new display technology that it calls the “Focal Surface Display.” The display aims to reduce the vergence-accommodation conflict that plagues today’s VR headsets. The company calls the work “groundbreaking.”

Oculus Research has published a paper and will present the research on the focal surface display at the SIGGRAPH conference this July. A video released by Oculus (heading this article) gives a brief explanation of what the display achieves. An accompanying blog post offers additional detail.

> Focal surface displays mimic the way our eyes naturally focus at objects of varying depths. Rather than trying to add more and more focus areas to get the same degree of depth, this new approach changes the way light enters the display using spatial light modulators (SLMs) to bend the headset’s focus around 3D objects—increasing depth and maximizing the amount of space represented simultaneously.
>
> All of this adds up to improved image sharpness and a more natural viewing experience in VR.
>
> […]
>
> By combining leading hardware engineering, scientific and medical imaging, computer vision research, and state-of-the-art algorithms to focus on next-generation VR, this project takes a highly interdisciplinary approach—one that, to the best of our knowledge, has never been tried before. It may even let people who wear corrective lenses comfortably use VR without their glasses.
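
To make the idea of an SLM “bending” focus a little more concrete, here is a textbook illustration, not Oculus’ method: a phase-only SLM can imitate a simple lens by displaying the paraxial thin-lens phase profile φ(x, y) = −π(x² + y²)/(λf), wrapped into the range [0, 2π). As we understand the concept, a focal surface display goes further by shaping the phase pattern so that different regions of the image come into focus at different depths; that optimization is not shown here. The function names, pixel pitch, wavelength, and focal length below are hypothetical values chosen for illustration.

```python
import math


def lens_phase(x_m: float, y_m: float, focal_length_m: float, wavelength_m: float) -> float:
    """Paraxial thin-lens phase (radians) at position (x, y), wrapped into [0, 2*pi)."""
    r2 = x_m * x_m + y_m * y_m
    phase = -math.pi * r2 / (wavelength_m * focal_length_m)
    # A phase SLM can only add a delay of up to one wave, so wrap modulo 2*pi.
    return phase % (2 * math.pi)


def lens_phase_map(n: int, pitch_m: float, focal_length_m: float,
                   wavelength_m: float) -> list[list[float]]:
    """n x n grid of wrapped phases (rows = y, columns = x), centred on the optical axis."""
    c = (n - 1) / 2
    return [
        [lens_phase((i - c) * pitch_m, (j - c) * pitch_m, focal_length_m, wavelength_m)
         for i in range(n)]
        for j in range(n)
    ]


# Hypothetical values: 8 um pixel pitch, 532 nm (green) light, 0.5 m focal length.
phases = lens_phase_map(5, 8e-6, 0.5, 532e-9)
```

Changing `focal_length_m` changes the optical power the pattern imitates; a real device would have to compute such patterns per region and per frame, which this sketch does not attempt.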

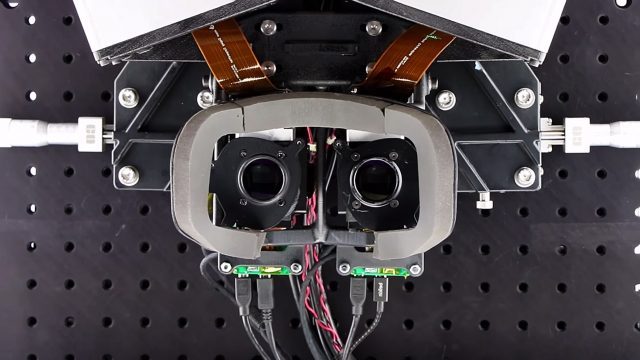

The researchers are using a spatial light modulator that appears able to bend light selectively, changing the focus of specific parts of the image.

The research paper, authored by Oculus Research scientists Nathan Matsuda, Alexander Fix, and Douglas Lanman, concludes with the following:

> Focal surface displays continue down the path set by varifocal and multifocal concepts, further customizing virtual images to scene content. We have demonstrated that emerging phase-modulation SLMs are well-prepared to realize this concept, having benefited from decades of research into closely-related adaptive imaging applications. We have demonstrated high-resolution focal stack reproductions with a proof-of-concept prototype, as well as presented a complete optimization framework addressing the joint focal surface and color image decomposition problems. By unifying concepts in goal-based caustics, retinal scanning displays, and other accommodation-supporting HMDs, we hope to inspire other researchers to leverage emerging display technologies that may address vergence-accommodation conflict in HMDs.

The display isn’t a perfect fix for the vergence-accommodation conflict; Oculus is pitching the technology as a “middle ground” between today’s VR displays and an ideal display that would fully resolve the conflict.

“While we’re a long way out from seeing results in a finished consumer product, this emerging work opens up an exciting and valuable new direction for future research to explore,” Oculus writes in its blog post. “We’re committed to publishing research results that stand to benefit the VR/AR industry as a whole.”

So-called ‘varifocal’ displays are a hot research topic right now because they could make the light emitted by a VR headset much closer to the light we see in the real world, allowing our eyes to focus more naturally and comfortably on the virtual scene. The same technology could also eliminate the need to wear glasses while using a VR headset.

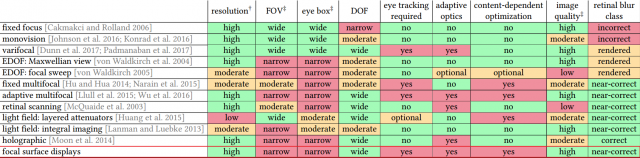

The focal surface display approach does require eye-tracking, which itself is not a completely solved issue. The researchers are also quick to admit that the technique is difficult to achieve with a wide field of view; above you can see their assessment of characteristics of a number of different techniques that have been devised to achieve a varifocal display.

Primer: Vergence-Accommodation Conflict

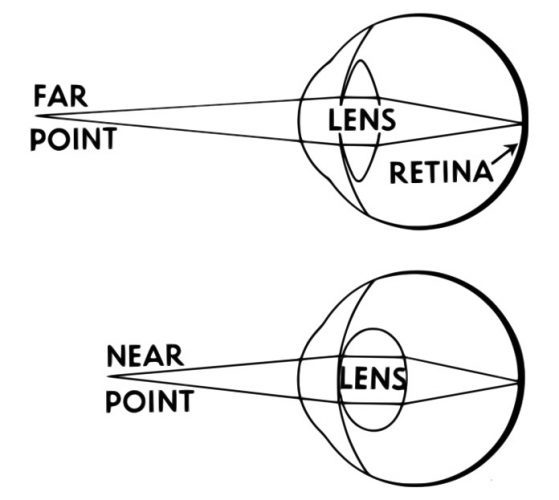

In the real world, to focus on a near object, the lens of your eye changes shape to focus the light from that object onto your retina, giving you a sharp view of the object. For an object that’s farther away, the light arrives at your eye at different angles, and the lens must change shape again to keep that light focused on your retina. This is why, if you close one eye and focus on your finger a few inches from your face, the world behind your finger is blurry. Conversely, if you focus on the world behind your finger, your finger becomes blurry. This is called accommodation.
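
Accommodation is usually measured in diopters, the reciprocal of the focus distance in metres, so the eye has to work much harder for near objects than far ones. A minimal sketch of that conversion; the 4-inch finger distance and the 5 m background distance are assumed values standing in for the article’s “a few inches” and “the world behind your finger.”

```python
INCH_M = 0.0254  # metres per inch


def accommodation_demand_diopters(distance_m: float) -> float:
    """Focusing power (diopters, 1/m) the eye needs for an object at distance_m.

    float("inf") (optical infinity) maps to 0 D, i.e. a fully relaxed eye.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


finger = accommodation_demand_diopters(4 * INCH_M)  # finger assumed 4 inches away
background = accommodation_demand_diopters(5.0)     # background assumed 5 m away
print(f"finger: {finger:.1f} D, background: {background:.1f} D")
```

The eye can’t supply both values at once, which is why one of the two always looks blurry.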

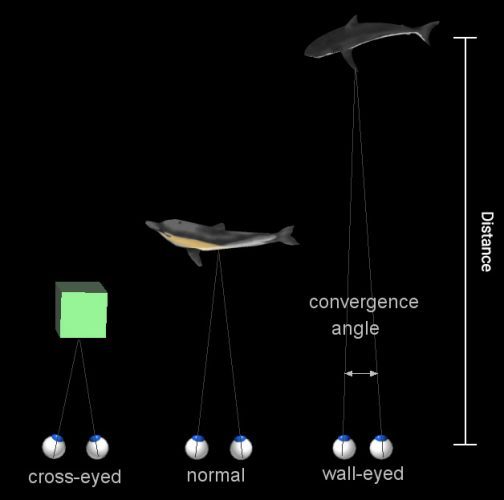

Then there’s vergence: each of your eyes rotates inward to ‘converge’ the separate views from each eye into one overlapping image. For very distant objects, your eyes are nearly parallel, because the distance between them is tiny compared with the distance to the object (so each eye sees a nearly identical portion of the object). For very near objects, your eyes must rotate sharply inward to converge the image. You can see this with a variation of the finger trick above: this time, using both eyes, hold your finger a few inches from your face and look at it. Notice that objects far behind your finger appear doubled. When you then look at those objects, you see a double image of your finger instead.
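
The geometry behind this is simple trigonometry. Assuming both eyes fixate a point straight ahead, each eye turns inward by atan((IPD / 2) / distance), so the total vergence angle is twice that. The sketch below assumes an interpupillary distance (IPD) of 63 mm, a commonly cited adult average; the function name is hypothetical.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle (degrees) between the two lines of sight when both eyes fixate
    a point straight ahead at distance_m; ipd_m is the interpupillary distance."""
    if distance_m <= 0 or ipd_m <= 0:
        raise ValueError("distances must be positive")
    # Each eye rotates inward by atan((ipd / 2) / distance); the total is twice that.
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


for d in (0.1, 1.0, 10.0, 1000.0):
    print(f"{d:>7} m -> {vergence_angle_deg(d):.3f} deg")
```

The angle shrinks toward zero as the distance grows, matching the “nearly parallel” eyes described above for distant objects.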

But when it comes to most of today’s AR and VR headsets, vergence and accommodation are out of sync due to inherent limitations of the optical design.

In a basic AR or VR headset, there’s a display (let’s say 3″ from your eye) that shows the virtual image, and a lens that bends the light from that display so your eye can bring it into focus (much as the lens in your eye normally focuses light from the world onto your retina). But because the display sits at a fixed distance from your eye, the light from every object shown on it appears to come from the same distance. So even if there’s a virtual mountain five miles away and a coffee cup on a table five inches away, the light from both objects enters the eye at the same angle, which means your accommodation (the focusing of the lens in your eye) never changes.
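
One way to see why the focus distance is fixed is the thin-lens equation, 1/f = 1/s_o + 1/s_i. If the display sits just inside the lens’s focal length, the lens forms a virtual image of the display some distance away, and that distance depends only on the hardware, never on what is being rendered. The sketch below is a simplified model: it treats the headset lens as a single thin lens, measures from the lens rather than the eye, and uses hypothetical distances rather than figures from any real headset.

```python
def virtual_image_distance_m(display_to_lens_m: float, focal_length_m: float) -> float:
    """Distance from the lens to the virtual image of the display (thin-lens model).

    Solving 1/f = 1/s_o + 1/s_i gives s_i = s_o * f / (s_o - f). With the display
    inside the focal length (s_o < f), s_i is negative: a virtual image on the
    display's side of the lens. We return its magnitude.
    """
    if not 0 < display_to_lens_m < focal_length_m:
        raise ValueError("display must sit inside the focal length to form a virtual image")
    s_i = display_to_lens_m * focal_length_m / (display_to_lens_m - focal_length_m)
    return -s_i


# Hypothetical headset: display 45 mm from a lens with a 47 mm focal length.
image_m = virtual_image_distance_m(0.045, 0.047)
# No scene content enters the calculation, so the eye focuses at this same
# distance whether it is looking at the virtual mountain or the coffee cup.
print(f"virtual image at {image_m:.2f} m, accommodation demand {1 / image_m:.2f} D")
```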

That conflicts with vergence, which in such headsets is variable because we can show a different image to each eye. Being able to adjust the image independently for each eye, so that our eyes must converge on objects at different depths, is essentially what gives today’s AR and VR headsets stereoscopy. But the most realistic (and arguably most comfortable) display we could create would eliminate the vergence-accommodation conflict and let the two work in sync, just as they do in the real world.
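
The size of the conflict is often expressed in diopters (reciprocal metres): the difference between the distance the eyes converge to and the distance they must focus to. A minimal sketch, assuming a hypothetical conventional headset whose fixed focal distance is 1.5 m, using the article’s coffee cup (five inches) and mountain (five miles):

```python
INCH_M = 0.0254    # metres per inch
MILE_M = 1609.344  # metres per mile


def va_conflict_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Mismatch, in diopters, between where the eyes converge and where they must focus."""
    if vergence_distance_m <= 0 or focal_distance_m <= 0:
        raise ValueError("distances must be positive")
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


FOCAL_M = 1.5  # hypothetical fixed focal distance of a conventional headset

cup = va_conflict_diopters(5 * INCH_M, FOCAL_M)       # coffee cup five inches away
mountain = va_conflict_diopters(5 * MILE_M, FOCAL_M)  # mountain five miles away
print(f"cup: {cup:.2f} D, mountain: {mountain:.2f} D")
```

In this model, the ideal display described above would drive the mismatch to zero for every object, which only happens when the focal distance tracks the vergence distance.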