Oculus VR has launched a preview build of their latest Oculus Rift SDK, v0.3.1. The new SDK adds initial support for the Oculus Rift DK2, improved distortion quality, John Carmack’s ‘Timewarp’ technique to reduce latency, and much more.

The Oculus Rift SDK v0.3.1 preview is only available for Windows and can be downloaded from the Downloads section of the Oculus VR developer portal. Oculus has also released the Unity Tuscany Demo and a Unity 4 integration for the preview. The full release of the Oculus Rift SDK v0.3.1 will support Mac and Linux, and we should see an official Unreal Engine 4 (UE4) integration from Oculus as well.

While initial support for the Oculus Rift DK2 has been added, Oculus says that positional tracking has not yet been implemented in the SDK. The full changelog for the update can be found at the bottom of this article.

Oculus has also updated their SDK Overview document to detail the latest changes at a technical level.

Reading Between the Lines

New API Calls Return Manufacturer Information—Oculus to Position Rift SDK as Industry Standard?

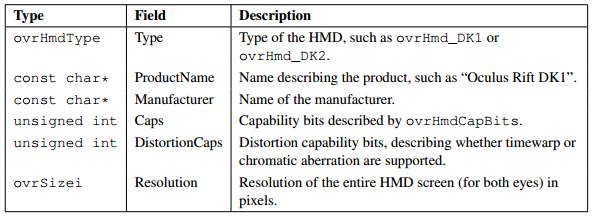

Oculus has significantly redesigned the API that developers use to integrate the Oculus Rift with their application. Part of that redesign includes an augmented set of variables that describe the characteristics of any VR headset that is connected to the system. While the ‘Type’ field makes sense, as devs will want to know going forward which Oculus headset (DK1, DK2, CV1) is connected, the ‘Manufacturer’ field seems overly broad—unless Oculus intends to let other VR headsets interoperate with their SDK.

Distortion Changes Bring a New Look to the Oculus Rift Side-by-Side Format

Note: The comparison photos were not taken from exactly the same location.

With the latest SDK comes a tweak to the side-by-side ‘Oculus Rift format’. The change isn’t monumental but is definitely interesting to see given that the split-screen view has become an icon of the Oculus Rift ever since the DK1 launched about a year ago.

This is part of Oculus’ new ‘SDK Rendered’ mode, which makes it easier for developers to implement correct distortion and frame-timing. “This means that developers don’t need to setup pixel and vertex shaders or worry about the details of distortion rendering, they simply provide the device and texture pointers to the SDK,” notes the latest SDK overview.

Developers may also use another rendering mode, which Oculus calls ‘Client Rendered.’ This lets developers handle distortion rendering within the application itself, as has been done previously. “This mode is intended for application developers who may wish to combine the Rift distortion shader pass with their own post-process shaders for increased efficiency, or if they wish to retain fine control over the entire rendering process.”

Oculus, however, says that ‘SDK Rendered’ is the preferred option going forward.

John Carmack’s Timewarp Technique

No, John Carmack hasn’t invented time travel (yet). Timewarp is a rendering technique that Carmack has been working on for some time. He’s been talking about Timewarp since before the DK1 was shipping, and wrote in detail about how the technique might be applied back in February of 2013—long before joining Oculus as CTO.

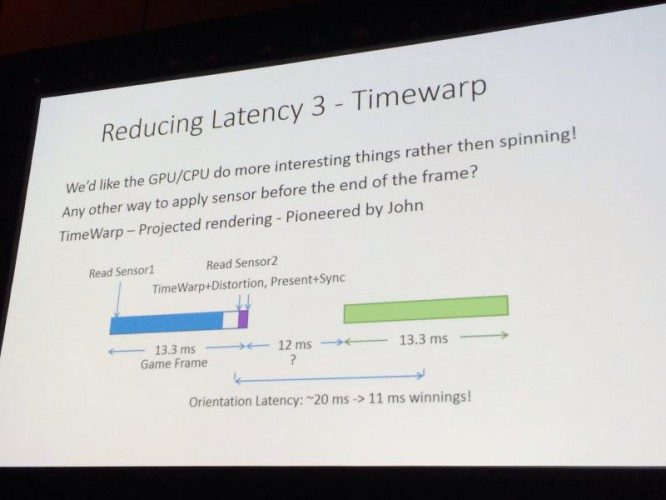

The goal of the method is to reduce ‘motion-to-photon’ latency: how long it takes from the moment you move your head to when the scene rendered by the Rift is updated. We heard more about Timewarp from Oculus at GDC, where they revealed that the new rendering technique would be available in their next SDK release. There, they described it as follows:

- Render the scene first with most recent orientation

- Sample the IMU just before distortion

- Timewarp re-projects the scene for change in orientation

- Can interpolate re-projection matrices for rolling shutter

If that’s a bit too technical, there’s no need to worry: the Oculus Rift SDK takes care of time warping automatically, so long as the developer uses the SDK Rendered mode for their application.

Oculus says that while Timewarp works for orientation, how to apply it to translation (positional tracking) is still under investigation.

Oculus Configuration Utility

The Oculus Configuration Utility has been updated in conjunction with the new SDK. The Utility now allows the user to indicate the eye-relief setting of the Oculus Rift DK1 (how far the lenses are from the user’s eyes). This info is used with the new distortion technique to maximize distortion quality.

A new Advanced Configuration section of the Utility now allows input of horizontal and vertical ‘Eye to Neck Distance’ to more precisely understand the position of the user’s eyes. The Utility retains the ability to measure the user’s IPD through a guided tool but now recommends that the eye-relief setting be set to its maximum before proceeding.

Full Changelog for Oculus Rift SDK v0.3.1 Preview

- Reorganized all HMD and sensor interfaces to be exposed through a simplified C API. The new API makes it easy to use LibOVR from other languages.

- We now support two approaches to rendering distortion: SDK Distortion Rendering and Client Distortion Rendering.

- SDK Distortion Rendering will be the recommended approach going forward as it gives us the most opportunity for future optimizations and benefiting from evolving hardware. In this mode, the application renders stereo views into one or more render targets, and then the Oculus SDK takes care of distortion rendering, frame timing, buffer swap, and GPU synchronization.

- Client Distortion Rendering now uses a mesh for rendering distortion instead of a coefficient-driven pixel shader. The distortion function is encoded into the UVs of the distortion mesh, thereby moving much of the complexity inside the SDK.

- Distortion quality has been significantly improved by modelling the distortion function using spline curves rather than polynomials. The distortion is also tailored to individual users based on profile settings specified in the Oculus Configuration Utility. Pulled “in” and pulled “out” physical settings of DK1 require different distortion for maximum quality.

- Introduced explicit support for frame timing in the SDK, which enables more accurate sensor prediction. Frame timing is tied to Vsync and is supported through either ovrHmd_BeginFrame/ovrHmd_EndFrame or ovrHmd_BeginFrameTiming/ovrHmd_EndFrameTiming function sets depending on the rendering approach.

- Sensor prediction is now based on absolute time that increments continuously through program execution, as compared to delta time relative to now. Absolute time reduces errors based on execution timing variability.

- Added initial support for the Oculus DK2 headset.

- Introduced a new technique called Timewarp to reduce motion-to-photon latency. This technique re-projects the scene to a more recently measured orientation during the distortion rendering phase.

- The OculusWorldDemo sample now features an interactive settings menu brought up by pressing the Tab key. Users can select a menu item by pressing Enter and then modify it with the Up and Down arrow keys.

- Reorganized the OculusRoomTiny sample to more visibly illustrate SDK use through one source file. OculusRoomTiny sample now supports switching between SDK and Client Distortion Rendering approaches by changing the SDK_RENDER define.