OpenBCI, the neurotech company behind the eponymous open-source brain-computer interface (BCI), is making a new hardware and software platform specifically for immersive headsets.

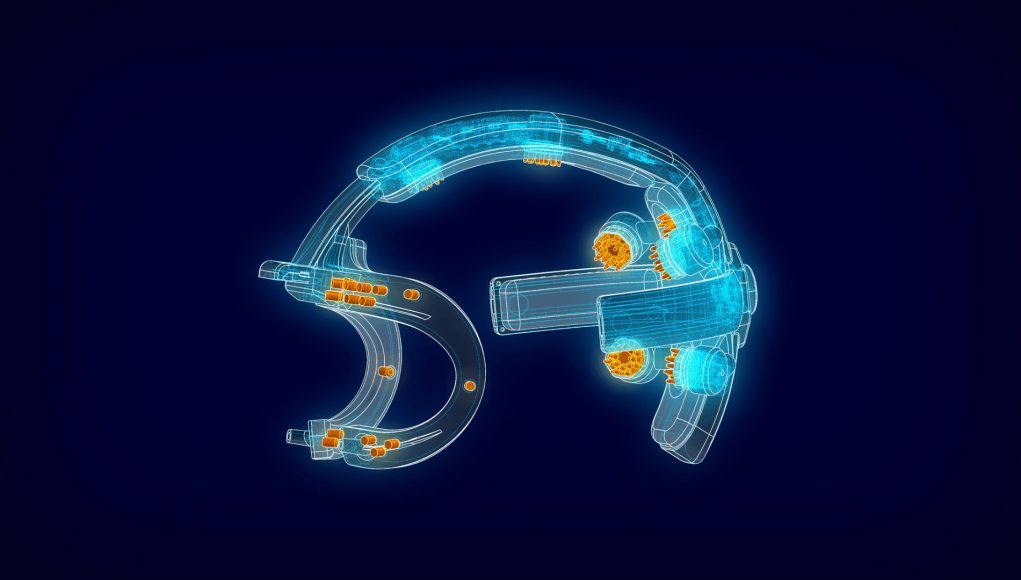

Called Galea, the new hardware is designed to attach to both AR and VR headsets, the company says, and arrives with multiple sensors designed to monitor biometric data streams in real time.

Galea is said to include a bevy of sensors, such as electroencephalography (EEG), electrooculography (EOG), electromyography (EMG), electrodermal activity (EDA), and photoplethysmography (PPG) sensors, which are intended to measure data from the brain, eyes, heart, skin, and muscles.

The company says Galea will allow researchers and developers to measure “human emotions and facial expressions,” including happiness, anxiety, depression, attention span, and interest level, and to use that data to create more immersive content tailored to the individual. The idea is very similar to what we heard from Valve’s resident experimental psychologist Dr. Mike Ambinder during last year’s GDC talk on how BCI will change the nature of game design.

OpenBCI says it will provide researchers and developers early access to Galea, intended for commercial and research applications. Galea will also include SDKs to bring its stream of biometric data into “common development environments,” the company says.

“I believe that head-mounted computers integrated with human consciousness will drive the next major technology paradigm shift,” said Conor Russomanno, CEO of OpenBCI. “Galea is the realization of six years of research and development. We are providing the world with a playground for experimentation and development using multi-modal biometric data in tandem with next generation wearable displays.”

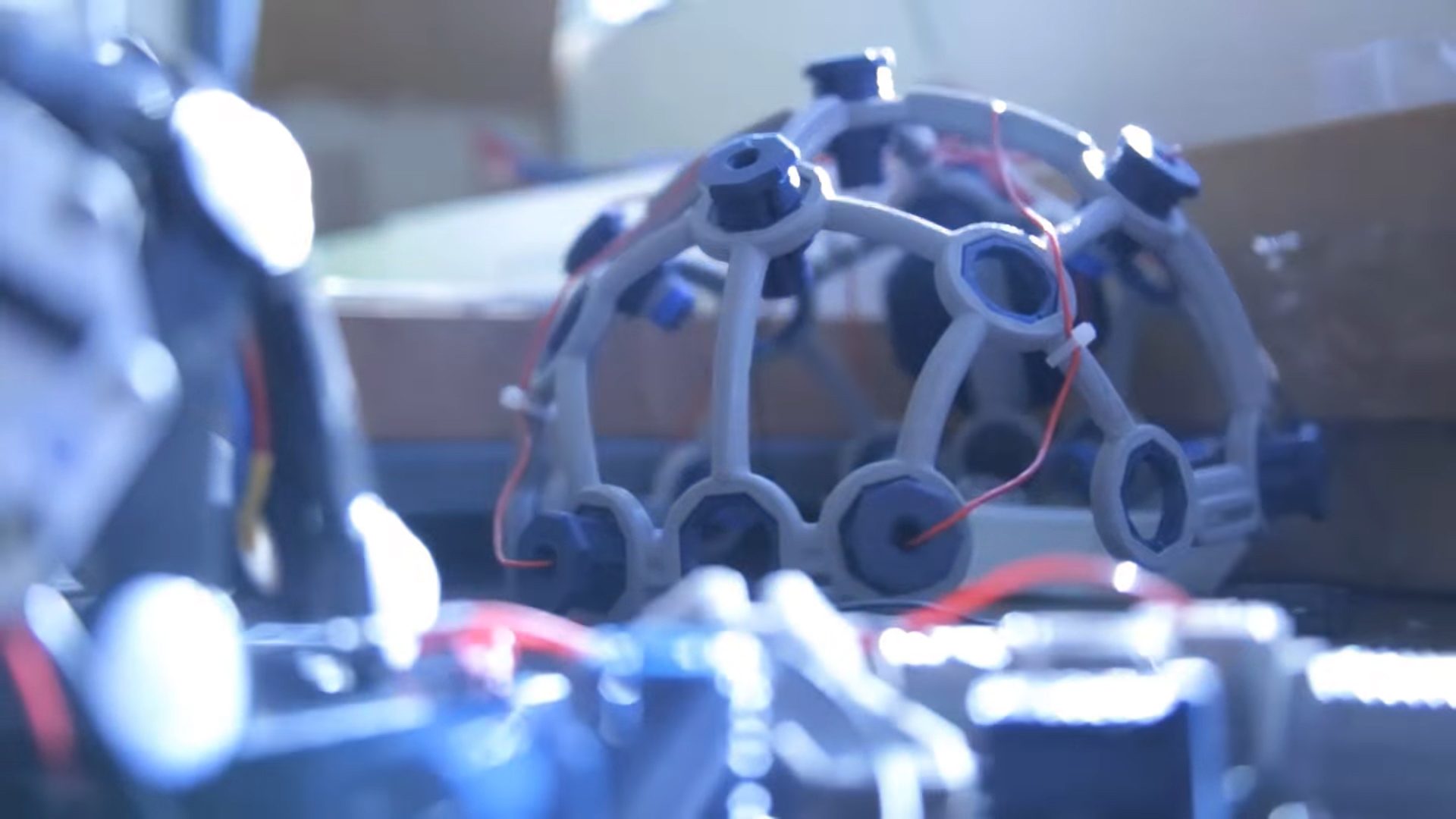

Founded on the back of a successful Kickstarter campaign in late 2013, OpenBCI creates consumer-grade biosensing systems built with open-source software and hardware. Today, the company’s hardware is used by researchers, academic labs, artists, and developers across 89 countries.