Thanks to its tracking cameras, Rift S also comes with a pass-through video feature that Oculus calls ‘Passthrough+’ (the ‘+’ denotes its low latency, stereo-correct depth, and wide field of view). It’s the best pass-through video we’ve seen on any consumer headset so far, and the feature will soon be enhanced to take advantage of new capabilities in NVIDIA’s latest GPUs. Oculus’ motion smoothing tech, Asynchronous Spacewarp, will be similarly upgraded.

While Quest also has a passthrough video function, Oculus didn’t give it the ‘Passthrough+’ designation because of its latency and lack of stereo depth. On the Rift S, however, the company was able to leverage the power of the PC to computationally solve for the depth of the scene around the user, and then reproject the view accordingly to provide stereo depth. Combined with lower latency, this makes Passthrough+ on Rift S look and feel more natural.
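To make ‘reproject the view’ concrete: once a depth is known for a camera pixel, that pixel can be back-projected into 3D and projected again from the eye’s position, which is what gives the camera image correct parallax. The Python sketch below assumes a simple pinhole model in which the camera and eye share the same orientation; `Pinhole`, `reproject`, and all numeric values are hypothetical illustrations, not Oculus’ implementation or Rift S calibration data.

```python
from dataclasses import dataclass


@dataclass
class Pinhole:
    f: float   # focal length in pixels (hypothetical value in the example)
    cx: float  # principal point, x (pixels)
    cy: float  # principal point, y (pixels)


def reproject(u, v, depth, cam, eye, offset):
    """Map pixel (u, v), seen by `cam` at a known depth (metres), into `eye`'s view.

    `offset` = (dx, dy, dz) is the camera's position relative to the eye in
    metres. Assumes both share the same orientation (a simplification: a real
    system would also apply a rotation).
    """
    # Back-project the pixel into a 3D point in camera coordinates.
    x = (u - cam.cx) * depth / cam.f
    y = (v - cam.cy) * depth / cam.f
    z = depth
    # Express the point in eye coordinates (pure translation here).
    dx, dy, dz = offset
    xe, ye, ze = x + dx, y + dy, z + dz
    # Project the point onto the eye's image plane.
    return (eye.f * xe / ze + eye.cx, eye.f * ye / ze + eye.cy)
```

With a 3 cm offset and a 500-pixel focal length, a point straight ahead at 2 m lands 7.5 pixels away from where the camera saw it, while a point at 4 m shifts only 3.75 pixels. That depth-dependent shift is exactly what an unreprojected camera feed lacks.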

On the Oculus Developer Blog, the company explained today that Passthrough+ builds on technology it originally developed for Asynchronous Spacewarp (ASW), which keeps motion smooth inside the headset even when the framerate is unstable. ASW does this by comparing previous frames to measure how things are moving, estimating how that motion should continue into the future, and then synthesizing a new frame from that estimate. For Passthrough+, similar tech compares simultaneous frames from the Rift S cameras to measure how far each object appears to shift between the two views, and from that infers the depth of the scene (similar to how we perceive depth in the real world with two eyes, each with a slightly different perspective).
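ASW’s extrapolation step can be illustrated with a deliberately simple model: take the motion measured between the last two frames, assume it continues at constant velocity, and push each pixel of the latest frame one step further along its vector. The NumPy sketch below (`extrapolate_frame` is a hypothetical name) does this with nearest-pixel forward warping. Oculus has not published ASW’s internals at this level of detail, so treat it as an illustration of the idea rather than the actual algorithm.

```python
import numpy as np


def extrapolate_frame(frame, motion):
    """Synthesize a predicted next frame by moving each pixel along its motion vector.

    frame:  (H, W) array, the most recently rendered frame.
    motion: (H, W, 2) array of per-pixel (dy, dx) displacements measured
            between the previous frame and `frame`.
    Assumes constant velocity: every pixel moves again by the same amount.
    """
    h, w = frame.shape
    # Where no pixel lands (newly uncovered areas), keep the old value,
    # so those regions show stale content in the synthesized frame.
    out = frame.copy()
    ys, xs = np.mgrid[0:h, 0:w]
    # Round each predicted position to the nearest pixel.
    ny = np.rint(ys + motion[..., 0]).astype(int)
    nx = np.rint(xs + motion[..., 1]).astype(int)
    # Drop pixels whose predicted position leaves the frame.
    valid = (ny >= 0) & (ny < h) & (nx >= 0) & (nx < w)
    out[ny[valid], nx[valid]] = frame[ys[valid], xs[valid]]
    return out
```

For a one-row frame `[[0, 0, 9, 0, 0]]` whose contents moved one pixel right, the predicted frame is `[[0, 0, 0, 9, 0]]`. Real systems must also handle pixels that collide or leave gaps, which is where visible artifacts tend to appear.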

The method is similar to what most video encoding tech uses, and today ASW and Passthrough+ offload this work to the video encoder in NVIDIA and AMD GPUs so that it runs ‘asynchronously’ (without impacting the work the GPU needs to do to render the VR world in the first place).
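The motion estimation that video encoders perform is commonly block matching: split the frame into fixed-size blocks and, for each one, search nearby positions in the previous frame for the best match. Below is a minimal exhaustive-search sketch in Python/NumPy that uses the sum of absolute differences (SAD) as the match cost. Hardware encoders use far faster search strategies, and `block_motion` with its defaults is illustrative, not the GPU encoder’s actual interface.

```python
import numpy as np


def block_motion(prev, curr, block=16, search=4):
    """Exhaustive block-matching motion estimation.

    For each block x block tile of `curr`, find the offset (dy, dx) within
    +/- `search` pixels whose tile in `prev` has the smallest sum of absolute
    differences (SAD). Returns an array of shape (H // block, W // block, 2).
    """
    h, w = curr.shape
    # Use a signed type so that subtraction cannot wrap around.
    prev = prev.astype(np.int64)
    curr = curr.astype(np.int64)
    vectors = np.zeros((h // block, w // block, 2), dtype=int)
    for by in range(h // block):
        for bx in range(w // block):
            y0, x0 = by * block, bx * block
            tile = curr[y0:y0 + block, x0:x0 + block]
            best, best_sad = (0, 0), None
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    y, x = y0 + dy, x0 + dx
                    if y < 0 or x < 0 or y + block > h or x + block > w:
                        continue  # candidate tile falls outside the frame
                    sad = np.abs(prev[y:y + block, x:x + block] - tile).sum()
                    if best_sad is None or sad < best_sad:
                        best, best_sad = (dy, dx), sad
            vectors[by, bx] = best
    return vectors
```

Each vector points from a block in `curr` to its best match in `prev`, so content that moved two pixels to the right yields `(0, -2)`. Lowering `block` produces a denser field of vectors over the same frame, at the cost of more searches and a greater chance of ambiguous matches in flat or repetitive regions.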

But an upgrade for Passthrough+ and ASW is on the way, and it will take advantage of NVIDIA RTX (and other NVIDIA ‘Turing’) GPUs, which support NVIDIA’s new optical flow technology. [Update: Oculus has confirmed the ASW upgrades will also come to the original Rift]

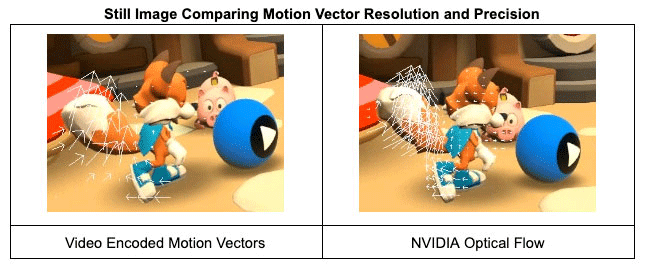

NVIDIA optical flow quadruples the macroblock resolution, increases motion vector resolution, enables following objects through intensity changes, and emphasizes plausible optical flow over compression ratios. The result is half the average end-point error of traditional video encoding motion vectors. The qualitative results are equally impressive. With ASW, near-field objects track more reliably. Swinging flashlights hallucinate motion much less frequently, and the increased precision means movement is tracked more accurately down to individual particles and objects.
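Average end-point error (EPE), the metric behind the claim of halving error relative to encoder motion vectors, is the standard way to score optical flow against ground truth: it is the mean Euclidean distance between each estimated motion vector and the true one. A minimal NumPy version follows; `average_epe` is an illustrative name, and no Oculus or NVIDIA benchmark data is reproduced here.

```python
import numpy as np


def average_epe(estimated, ground_truth):
    """Average end-point error between two flow fields.

    Both inputs have shape (..., 2), one (dy, dx) vector per pixel or block.
    Returns the mean Euclidean length of the per-vector error.
    """
    diff = np.asarray(estimated, dtype=float) - np.asarray(ground_truth, dtype=float)
    return float(np.mean(np.linalg.norm(diff, axis=-1)))
```

For example, if one estimated vector is off by (3, 4) and another is exact, the errors are 5 and 0, so the average EPE is 2.5 pixels. Halving EPE means estimated vectors land, on average, half as far from the true motion.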

The end result, Oculus says, is more accurate motion estimation, which means fewer artifacts when ASW is in use.

Similarly, Passthrough+ on Turing GPUs will make use of optical flow upgrades to improve the depth and stability of the view.

For Passthrough+, this means increased stereo resolution of the projected world, with thin objects correctly tracked and followed. When faced with low-contrast or over-exposed areas, NVIDIA optical flow can still infer meaningful disparity values, preventing visual holes or missing data as we estimate the depth of the scene.
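Disparity and depth are linked by the standard stereo relation Z = f·B/d: depth equals the focal length (in pixels) times the camera baseline, divided by the disparity. The sketch below applies it per pixel and marks pixels without a valid disparity as NaN, which is where the ‘visual holes’ described above would come from. `disparity_to_depth` is a hypothetical helper, and the focal length and baseline in the example are placeholders, not Rift S specifications.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (metres) via Z = f * B / d.

    Pixels with no valid stereo match (disparity <= 0 or NaN) become NaN:
    these are the holes a renderer would otherwise have to fill somehow.
    """
    d = np.asarray(disparity, dtype=float)
    depth = np.full(d.shape, np.nan)
    valid = d > 0  # NaN compares as False, so unmatched pixels stay NaN
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth
```

With a placeholder focal length of 500 pixels and a 6.4 cm baseline, disparities of 10, 20, and 0 pixels give roughly 3.2 m, 1.6 m, and a hole. Halving the disparity doubles the depth, so small disparity errors on distant objects translate into large depth errors.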

This comparison between the old method (left) and the new one (right) shows how much more stable and accurate the optical flow method is at determining the depth of the scene (the computed depth reconstruction is inset in each view). | Courtesy Oculus

Oculus says the enhancements will launch in June and will be compatible with Turing GPUs running the latest drivers.

Beyond that, the company sounds bullish on what other doors NVIDIA’s optical flow tech may have opened.

This isn’t the end of the line for ASW and optical flow. As demonstrated here, optical flow doesn’t just track movement, but also informs how scenes are arranged spatially. There’s a great deal of information we can glean about environments using optical flow, none of which we can do well with a traditional video encoder approach. The optical-flow SDK opens up many opportunities and research, so be sure to check back for more learnings + insights!