Serious Simulations, a provider of virtual reality military training solutions, say they may have a solution to the tethered desktop virtual reality conundrum. Serious claim that their wireless system can stream video at a latency low enough to be suitable for VR.

Like many companies entering the VR space, Serious Simulations are a young company. Founded some 18 months ago by CEO Chris Chambers, the company aims to capture a slice of the professional VR market with bespoke immersive systems for clients such as the military. In doing so, however, they found that the available high-end VR systems relied on a cable tethering the headset to a PC, which didn’t fit their requirements.

So they went away and designed a system that can stream HD video to a VR display at extremely low latency, low enough, they claim, to replace the (currently unavoidable) cable.

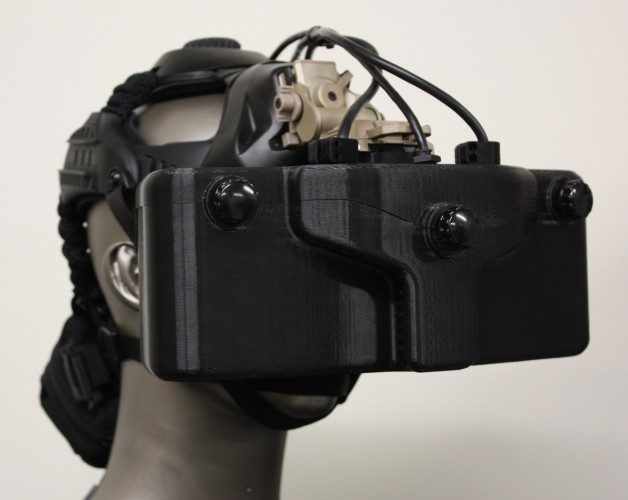

The prototype system Serious were demonstrating at the Vision VR/AR Summit last month uses the company’s proprietary video reformatting process to deliver the first pixel of every new frame from computer to HMD in 17 microseconds over a 60GHz wireless link. The solution requires an additional unit, worn on the user’s person, to sit in close proximity to the HMD. This extra box receives the image data wirelessly, then ‘reformats’ it for delivery over a local HDMI/MIPI interface directly to the HMD’s display.

Serious claim that other wireless video transmission solutions using the new 60GHz IEEE 802.11ad protocol require full-frame buffering on the HMD side, adding 17-22 milliseconds of delay. According to Chambers, “until we invented this video formatting procedure and associated hardware, there was at least a 17-22 MILLISECOND PENALTY for manufacturers to go from wires to wireless for their VR displays. That is additional latency on top of their existing pipeline. This explains why no manufacturers are spending resources on true wireless video solutions, since their latency pipelines are already too long.”

By contrast, Serious Simulations’ claimed latency of 17 microseconds for a single 1080p, 60Hz feed led them to dub their system ‘zero frame’ latency.
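Why a millisecond penalty on one side and a microsecond figure on the other? A rough back-of-the-envelope model helps: a receiver that buffers a whole frame cannot start scanning out until roughly one frame time has passed, while one that buffers a single row waits roughly one row time. The sketch below assumes rows are evenly spaced across the frame and ignores processing and radio delays; the 1125-line figure is the standard total line count (including vertical blanking) for 1080p60 video timing, not something Serious have stated about their system.

```python
def frame_time_us(refresh_hz: float) -> float:
    """Time to scan out one full frame, in microseconds."""
    return 1_000_000 / refresh_hz


def row_time_us(refresh_hz: float, rows_per_frame: int) -> float:
    """Time to scan out one row, assuming rows are evenly spaced across the frame."""
    return frame_time_us(refresh_hz) / rows_per_frame


if __name__ == "__main__":
    # Buffering a whole frame delays output by at least one frame time.
    print(f"full frame @ 60 Hz: {frame_time_us(60):.1f} us")
    # Buffering a single row delays output by roughly one row time.
    print(f"one row, 1080 active rows: {row_time_us(60, 1080):.2f} us")
    # Standard 1080p60 timing has 1125 total lines once vertical blanking is counted.
    print(f"one row, 1125 total lines: {row_time_us(60, 1125):.2f} us")
```

Run as a script, this prints about 16666.7 us for a full frame and roughly 15.43 us or 14.81 us for a single row, which puts the claimed 17 microseconds in the same order of magnitude as one row of buffering rather than one frame.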

Frank He went hands-on with an Oculus Rift DK2 fitted with Serious Simulations’ ‘Zero Frame’ wireless system and sat down to chat with Chambers at Unity’s Vision VR/AR Summit in California last month.

Update: Since this interview was conducted, Serious Simulations have been in touch with this update:

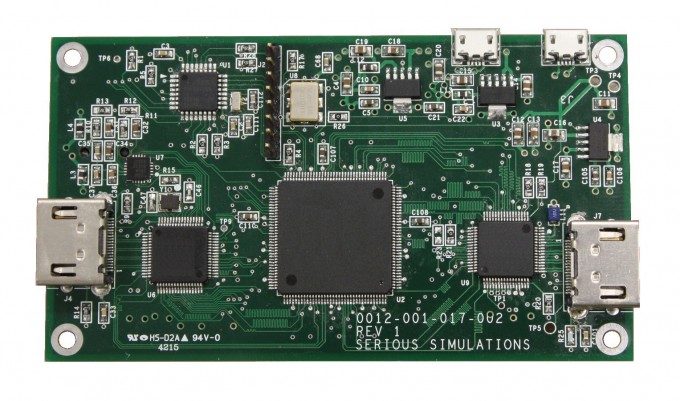

Since then Serious Simulations has begun production of our updated wireless VR processor (we have been calling it the “Gen 2 board”). It has a more powerful FPGA, an audio amp, a better connector layout that makes HMD conversion friendlier, and power management functions. In addition, it lays the groundwork we needed for our next big leap forward in wireless VR, which is to support higher resolutions and higher refresh rates to stay in sync with the direction of the industry. This latter process is a huge step for us and is patent pending as of February 2016. We are building the prototype now.

Road to VR: Can you introduce yourself, and tell us a little about Serious Simulations’ origin and its focus?

Chambers: I’m Chris Chambers, CEO of Serious Simulations. I founded the company about a year and a half ago to develop professional training solutions using a VR approach, with full human motion and freedom of motion as the main interface. One of the things we found immediately was that there was no wireless display option adequate for anything we wanted to do in professional training, so we had to develop a lot of that.

We developed our own HMD, which has a very wide FOV and high resolution, and we put that into a wireless package. The wireless package initially included frame rotation software to bridge the TV-industry wireless links, which are landscape mode, to the display, which is a natively portrait device. But that frame rotation software added 17 milliseconds of additional latency, which we found unacceptable.

There was no [available] alternate solution, so we had to invent one. And so we did. We created a video processor system that uses pixel shader code on the GPU to prearrange the image while keeping it in landscape mode. It looks scrambled on the screen you saw earlier, but it ships out as a landscape-mode image and goes over the wireless link in landscape. Then we have a patented process on the headset itself, in the form of a small printed circuit board, and that process of descrambling the image and sending it to the screen takes approximately 17 microseconds because we’re only buffering one row of a 1080p display. That’s it in a nutshell. It’s very, very fast, mainly because the buffering is done on one row rather than on one full frame of video.
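Chambers doesn’t describe the patented process, so the sketch below is only a hypothetical illustration of why prearranging the image on the GPU can remove the full-frame buffer. With a naive 90-degree rotation, the pixels of the portrait panel’s first row are spread across every row of the landscape link frame, so the receiver must hold the whole frame before it can start. If instead the GPU lays the portrait pixels out in the panel’s own scan order and simply cuts that stream into landscape-width rows (which looks scrambled when viewed), the receiver can emit panel rows as soon as they arrive with only about a row’s worth of buffering. All function names and the tiny 4x6 frame are illustrative assumptions, not Serious Simulations’ design.

```python
from typing import List, Tuple

Frame = List[List[int]]


def make_portrait_frame(width: int, height: int) -> Frame:
    """Label each pixel with its index in the portrait panel's scan-out order."""
    return [[row * width + col for col in range(width)] for row in range(height)]


def rotate_to_landscape(portrait: Frame) -> Frame:
    """Naive approach: rotate the portrait frame 90 degrees clockwise for a landscape link."""
    height, width = len(portrait), len(portrait[0])
    return [[portrait[height - 1 - j][i] for j in range(height)] for i in range(width)]


def pack_in_scan_order(portrait: Frame, link_width: int) -> Frame:
    """Hypothetical prearrangement: stream portrait pixels in panel scan order,
    cut into landscape-width link rows (looks scrambled if displayed directly)."""
    flat = [pixel for row in portrait for pixel in row]
    if len(flat) % link_width:
        raise ValueError("frame size must be a multiple of the link row width")
    return [flat[i:i + link_width] for i in range(0, len(flat), link_width)]


def link_rows_before_first_panel_row(link_frame: Frame, panel_width: int) -> int:
    """How many link rows must arrive before panel row 0 (ids < panel_width) is complete."""
    last = max(r for r, row in enumerate(link_frame) if any(p < panel_width for p in row))
    return last + 1


def stream_unpack(link_frame: Frame, panel_width: int) -> Tuple[Frame, int]:
    """Receiver for the packed layout: emit panel rows as soon as they are complete.
    Returns the panel rows and the peak number of pixels held in the buffer."""
    buffer: List[int] = []
    panel_rows: Frame = []
    peak = 0
    for link_row in link_frame:
        buffer.extend(link_row)
        peak = max(peak, len(buffer))
        while len(buffer) >= panel_width:
            panel_rows.append(buffer[:panel_width])
            del buffer[:panel_width]
    return panel_rows, peak


if __name__ == "__main__":
    portrait = make_portrait_frame(width=4, height=6)  # tiny stand-in for a portrait panel
    rotated = rotate_to_landscape(portrait)             # 4 link rows of 6 pixels
    packed = pack_in_scan_order(portrait, link_width=6)
    print(link_rows_before_first_panel_row(rotated, 4))  # 4: every link row is needed
    print(link_rows_before_first_panel_row(packed, 4))   # 1: the first link row suffices
    rows, peak = stream_unpack(packed, 4)
    print(rows == portrait, peak)                        # True 8
```

The design choice being illustrated is moving the reordering work to the GPU, where the full frame already exists, so that the headset side only ever needs a small line buffer.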

Road to VR: With your solution, you sort of want to partner with other companies doing VR, is that right?

Chambers: Absolutely. That’s why we’re here. We’re announcing this innovation to the industry, and although we’ve only embedded it in our own HMD, we’re a very small business and we are willing to license the IP, sell the IP, or whatever it takes to get this out to other manufacturers who’d like to have a zero-latency wireless link.

Road to VR: So I’m assuming that Oculus, Sony, HTC, and, you know, any of the big players have come and… Checked you out?

Chambers: Yes, this has been a great venue for that and we’ve had some interest from all the parties we’ve desired to talk to here. It’s very good.

Road to VR: [Your current demo at the booth] is only working on an Oculus Rift DK2 right now, so for the consumer Oculus Rift, and HTC Vive, they have higher refresh rates and have a lot more pixels that need to be sent over. Will your solution be able to handle that?

Chambers: Well, we are scaling up the solution, and our next generation, which we’re working on now and which is coming out this year, has the ability to reach 90 Hz refresh rates. That involved a slightly different process that gave us more capacity to push that many pixels. And we filed a patent on that process last week.
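To put “a lot more pixels” in numbers, the sketch below estimates raw, uncompressed pixel bandwidth. It assumes 24 bits per pixel and counts only active pixels, ignoring blanking intervals and link overhead. The consumer Oculus Rift and HTC Vive both use a combined panel resolution of 2160x1200 at 90 Hz; the 1080p60 figure matches the single feed quoted for Serious’ current system.

```python
def raw_video_gbps(width: int, height: int, refresh_hz: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed active-pixel bandwidth in Gbit/s (ignores blanking and link overhead)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    demo = raw_video_gbps(1920, 1080, 60)      # single 1080p60 feed
    consumer = raw_video_gbps(2160, 1200, 90)  # combined panels of Rift CV1 / Vive
    print(f"1080p60: {demo:.2f} Gbit/s")
    print(f"2160x1200 @ 90 Hz: {consumer:.2f} Gbit/s")
```

Under these assumptions the consumer headsets need roughly 5.6 Gbit/s against about 3.0 Gbit/s for the demo feed, close to double the throughput.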

Road to VR: So hypothetically if you were to integrate that technology into one of the Oculus Rifts or HTC Vives, how much [cost] would be added to the base price of the headset itself?

Chambers: Yeah, it’s almost an impossible question to answer right now…

Road to VR: Just, like a rough ballpark.

Chambers: Well we know where we’d like to go to. We’d like to get down into the low hundreds of dollars so that this becomes consumable by… You know mass consumption. And we’re not there yet but it can be with quantity – it can be very affordable. As a small business we don’t have the quantities to justify the low price just yet.

Road to VR: And also, do you know about eye tracking technology?

Chambers: Yeah.

Road to VR: And foveated rendering perhaps?

Chambers: That you’re ahead of me on.

Road to VR: So foveated rendering, it’s this concept where with eye tracking, you know where the eye is looking and because our eyes only really see really high detail in the center – the fovea – we can render our peripheral vision at a much lower resolution. So I was thinking if you could have a really special data [format]… With only that really high resolution center and low resolution peripheral, you know that could reduce some of the bandwidth requirements right?

Chambers: Yeah.

Road to VR: So I would think that would help to lower the barrier to entry a bit. So I WAS going to ask if there was anyone who was working in that space who had talked to you, but I guess not!

Chambers: We haven’t yet, but it sounds like an interesting idea and certainly conceivable. I don’t see why it would be difficult to do.
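A quick count shows why a foveated format could ease bandwidth. The sketch assumes a hypothetical layout in which a full-resolution inset around the gaze point is sent alongside the whole frame downscaled by a fixed factor in each axis; the inset size and scale factor are arbitrary illustrative choices, not a format anyone in this interview proposed in detail, and eye-tracking latency is ignored.

```python
def foveated_pixel_count(width: int, height: int, fovea_w: int, fovea_h: int,
                         periphery_scale: int) -> int:
    """Pixels sent per frame for a hypothetical foveated layout: a full-resolution
    inset plus the whole frame downscaled by `periphery_scale` in each axis."""
    inset = fovea_w * fovea_h
    periphery = (width // periphery_scale) * (height // periphery_scale)
    return inset + periphery


if __name__ == "__main__":
    full = 2160 * 1200
    foveated = foveated_pixel_count(2160, 1200, fovea_w=432, fovea_h=240, periphery_scale=4)
    print(f"{foveated} of {full} pixels ({foveated / full:.0%})")
```

With these made-up parameters the frame shrinks from 2,592,000 to 265,680 pixels, roughly a tenth, though a real scheme would need per-eye insets and a reconstruction step on the headset.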

Road to VR: So there was one thing I was wondering, and perhaps this is because of my own lack of technical knowledge, but the way the Oculus Rift consumer version and HTC Vive work is that they have two separate screens, and they’re updated globally and not through a [rolling] shutter. Does that affect anything? Like you mentioned your solution was to solve that sort of landscape to portrait conversion. But what about those [headsets] that may not necessarily apply?

Chambers: Yeah I actually don’t know enough about it until we get into the proto discussions with those companies. I don’t see any impediments there. We have a path to much greater data throughput that gets us to higher resolutions and… We are already doing dual screen in our own HMD so we are pushing two streams of data simultaneously to two screens. So it all sounds entirely doable.

Road to VR: The two screens – there’s no difference in delay between the two eyes is there?

Chambers: No they’re perfectly synced. They’re coming out of the GPU – a dual output GPU – so they’re being rendered at the same time in one large image.
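One way to picture the “one large image” Chambers describes is a side-by-side stereo frame whose scanlines are split down the middle, so both panels receive the same row at the same moment. The sketch below is a hypothetical model of that idea; the function names are illustrative and it does not reflect how Serious’ hardware actually routes the two streams.

```python
from typing import Iterator, List, Tuple

Row = List[int]


def split_side_by_side(wide_row: Row) -> Tuple[Row, Row]:
    """Split one scanline of a side-by-side stereo image into left- and right-eye rows."""
    half = len(wide_row) // 2
    if len(wide_row) != 2 * half:
        raise ValueError("side-by-side row must have an even width")
    return wide_row[:half], wide_row[half:]


def drive_two_panels(wide_frame: List[Row]) -> Iterator[Tuple[int, Row, Row]]:
    """Yield (row_index, left_row, right_row): both panels get the same row index
    together, so neither eye runs ahead of the other."""
    for index, wide_row in enumerate(wide_frame):
        left, right = split_side_by_side(wide_row)
        yield index, left, right


if __name__ == "__main__":
    frame = [[0, 1, 2, 3], [4, 5, 6, 7]]
    for index, left, right in drive_two_panels(frame):
        print(index, left, right)
```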

Another thing that’s interesting is that we’re moving forward with a new method that we can push more than just the video across our wireless link, that we’ll be able to include audio and command data. We can perform other functions on the HMD than just serving video. We’re going to combine all those streams of data and have that wirelessly synced to the HMD. Our whole goal is to not have any tethers.
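Carrying audio and command data alongside video over one link generally means multiplexing: tagging each chunk with its stream type and length so the headset can separate them again. The sketch below uses a hypothetical framing (a 1-byte type plus a 2-byte length header); Serious have not published their link format, so the stream codes and layout are assumptions for illustration only.

```python
import struct
from typing import Iterable, List, Tuple

# Hypothetical stream type codes; the real link format is not public.
VIDEO, AUDIO, COMMAND = 0, 1, 2
HEADER = struct.Struct(">BH")  # 1-byte stream type, 2-byte big-endian payload length


def mux(packets: Iterable[Tuple[int, bytes]]) -> bytes:
    """Interleave typed packets into one byte stream for a single wireless link."""
    out = bytearray()
    for stream_type, payload in packets:
        if len(payload) > 0xFFFF:
            raise ValueError("payload too large for a 16-bit length field")
        out += HEADER.pack(stream_type, len(payload))
        out += payload
    return bytes(out)


def demux(stream: bytes) -> List[Tuple[int, bytes]]:
    """Recover the typed packets on the headset side, in arrival order."""
    packets = []
    offset = 0
    while offset < len(stream):
        stream_type, length = HEADER.unpack_from(stream, offset)
        offset += HEADER.size
        packets.append((stream_type, stream[offset:offset + length]))
        offset += length
    return packets


if __name__ == "__main__":
    sent = [(VIDEO, b"row-0 pixels"), (AUDIO, b"\x01\x02"), (COMMAND, b"haptic")]
    print(demux(mux(sent)) == sent)
```

A real link would also need error handling for corrupted or truncated packets, which this sketch omits.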

Road to VR: What sort of range are you hoping for without the signal degrading? Like, how far can you walk away from the transmitter?

Chambers: On the current 60 GHz wireless link, we tested it at over 53 feet, so for the purposes we were looking for, that was plenty, and for most VR applications that’s sufficient. Longer than that, we’d have to do something different. But again, that technology is just an off-the-shelf commercial wireless link, you know, the DVDO-type wireless links out there, so we’re living with that because that’s what’s out there. We have our own path to go beyond that, which we’re working on this year.

Road to VR: So with the demo setup you have here, I saw some weird things, like to the peripheral vision…

Chambers: Well again, since on this particular version, we provided the wireless video, but I don’t have any access to the SDK, so we weren’t using their tracker and we’re not using their code to do you know any of the image distortion. So that work still has to be done. You’re seeing some anomalies in the image because we’ve gone around it. We’re not using the SDK. We don’t have any access to the SDK. That can all be fixed with access to the SDK.
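For context on the “image distortion” step Chambers mentions: HMD software normally pre-warps each frame so the lenses undo it, often with slightly different strength per color channel to counter chromatic aberration. The sketch below shows a generic one-term radial pre-warp with made-up per-channel coefficients; the Oculus SDK’s actual correction is not reproduced here and is tuned per headset. Because the warp grows with radius, edges are where a missing or mismatched correction is most visible.

```python
from typing import Dict, Tuple

# Hypothetical per-channel radial coefficients; a real headset's values come from its SDK.
K1: Dict[str, float] = {"r": 0.20, "g": 0.22, "b": 0.24}


def predistort(x: float, y: float, k1: float) -> Tuple[float, float]:
    """For an output pixel at normalized lens coordinates (center at 0, 0), return the
    source position to sample: r' = r * (1 + k1 * r^2). Sampling farther out than the
    output position produces a barrel-shaped pre-warp."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2
    return x * scale, y * scale


if __name__ == "__main__":
    for channel, k1 in K1.items():
        # Near the edge, each channel samples from a slightly different radius.
        print(channel, predistort(0.8, 0.0, k1))
```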

Road to VR: So you actually have to integrate with-

Chambers: We have a little more work to do yes.

Road to VR: So you can’t just connect it to any video signal and have it work.

Chambers: It’s working to a degree but you see some anomalies like that. Mostly in the edges you see some odd colors/distortions. That’s just something we haven’t worked with yet.

Road to VR: How has the [reception] been at your booth?

Chambers: Most of them, they get the demo and they say “I get it, I see what you’re doing, that’s great.” And they’re convinced – like “wow.” VR is here to stay. So it’s pretty cool – the light goes on and they get it.

It’s early days yet for Serious Simulations’ application to consumer headsets. To achieve the dream of a truly untethered high-end VR solution, integration with Oculus’ tightly coupled software-to-hardware stack could prove challenging, especially as Serious will be presenting a non-standard solution. However, 60GHz wireless technology could be an extremely interesting option for the second generation of VR headsets, which will likely not be too far behind those launching this year.

You can find more information on Serious Simulations and their technology on their website.