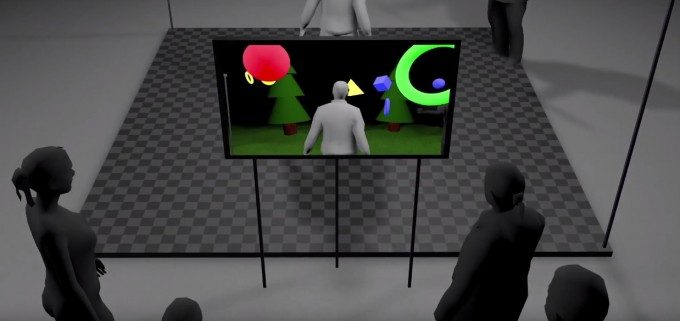

This project uses Microsoft’s Kinect depth camera to fuse the virtual and the real, producing real-time mixed reality footage with no green screen required.

Mixed reality is all the rage and has become one of the most effective ways to convey the sense of immersion afforded by new virtual reality technologies. The approach, spearheaded most recently by the Fantastic Contraption team in their excellent teaser series, uses the Vive’s room-scale positional tracking in conjunction with green screen backdrops to fuse the virtual and the real.

A new experimental project has come up with an alternative method, one that does away with draping your demo area in green sheets and instead leverages Microsoft’s Kinect depth camera to achieve a similar effect in real time. The technique lets exhibitors show off a user interacting with a virtual application while retaining any demo space design (say, a stand at a conference venue), and still produce a compelling way to visualise what makes VR so special.

“We built a quick prototype using one of HTC Vive’s controllers for camera tracking, Microsoft Kinect v2, a Kinect v2 plugin and Unity running on 2x machines,” says the team, who have demonstrated their work via the above YouTube video. “The server ran the actual VR scene and the client extracted data from the Kinect, placed the point cloud into the scene, resulting in the mixed reality feed. The depth threshold was altered dynamically based on the position of the headset.”
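To make the client-side step a little more concrete, here is a rough sketch of the idea: take a depth frame, discard everything farther away than the player, and back-project what remains into a point cloud. This is not the team’s code, which ran inside Unity via a Kinect v2 plugin. It is a minimal Python illustration that assumes the frame arrives as a flat, row-major list of depth values in millimetres (0 meaning no reading), that the headset position has already been transformed into the Kinect’s camera space in metres, and that the depth camera’s pinhole intrinsics (`fx`, `fy`, `cx`, `cy`) are known. The names `cutoff_depth` and `depth_to_points` and the 0.5 m margin are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Assumed pinhole parameters of the depth camera, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float


def cutoff_depth(headset_pos_m, margin_m=0.5):
    """Hypothetical dynamic threshold: the headset's depth (z, metres) in
    Kinect camera space plus a fixed margin, so the player stays in the
    shot while the background behind them is discarded."""
    _, _, z = headset_pos_m
    return z + margin_m


def depth_to_points(depth_mm, width, intr, max_depth_m):
    """Back-project a flat, row-major depth frame (millimetres) into
    camera-space points (metres), skipping pixels with no reading (0) and
    anything farther than max_depth_m. Axes follow the image convention,
    so y grows downwards."""
    points = []
    for i, d in enumerate(depth_mm):
        if d == 0:  # no depth reading at this pixel
            continue
        z = d / 1000.0
        if z > max_depth_m:  # behind the player: treat as background
            continue
        u, v = i % width, i // width  # pixel column and row
        x = (u - intr.cx) * z / intr.fx
        y = (v - intr.cy) * z / intr.fy
        points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Placeholder intrinsics for a 512x424 depth frame, not calibrated values.
    intr = Intrinsics(fx=365.0, fy=365.0, cx=256.0, cy=212.0)
    frame = [0] * (512 * 424)
    frame[212 * 512 + 256] = 1800  # a pixel on the player, 1.8 m away
    frame[212 * 512 + 300] = 4000  # a wall 4 m away, which gets dropped
    limit = cutoff_depth((0.1, 0.0, 1.7))
    print(depth_to_points(frame, 512, intr, limit))
```

The margin is the main tuning knob in a scheme like this: too small and the player’s outstretched arms get clipped, too large and anything wandering in just behind them is kept, which is one plausible source of the pop-in mentioned below.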

Of course, the compositing is not as precise as a professionally produced green-screen equivalent, and there will be occasional pop-in from other objects that creep into the demo space, but it’s a neat, low-cost and potentially more practical approach to getting your VR app noticed at a venue.

However, the technique’s biggest drawback, and likely its Achilles heel, is that the target VR application must itself support displaying the point cloud imagery alongside the view captured by the virtual camera. No mean feat.

Nevertheless, it’s an intriguing approach, and one that once again reminds us how Microsoft’s gaming peripheral seems to have found a far more productive life than its original, ill-fated purpose.

You can read about the project in more detail over at the team’s YouTube channel.