I am incredibly excited about the near future of VR after seeing these three new videos from Sixense, makers of the forthcoming STEM VR controller. The videos show the kinds of user experiences that become possible when players move from abstracted controllers to natural motion input.

I’ve ranted at length about the need for natural motion input in virtual reality. The Survios Holodeck, which rigs you up with full motion input for VR, was one of the most fun VR experiences I’ve had. Natural motion input leads to intuitive interactions that are not only more immersive but also more fun than anything a button-and-stick controller allows. STEM, the next-gen motion input controller from Sixense (the folks behind the Razer Hydra), is taking things to a wonderful new level.

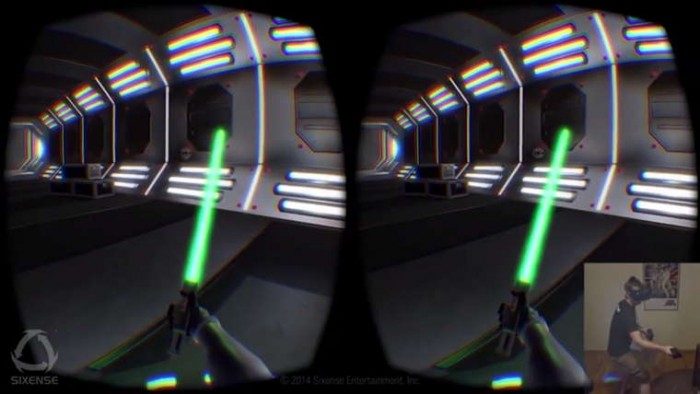

Become a Jedi Master

Even if you are (somehow) not a Star Wars fan, you’ve at least got to be excited at the prospect of reflecting incoming laser fire with a lightsaber. Just wait until they get Force Push going… No more ‘hold X to reflect lasers’; you get to do it yourself.

Reload for Real

It’s amazing how limited traditional controllers are. Even though many of the world’s most popular games are first-person shooters, wielding a gun has been reduced to an incredibly oversimplified experience: ‘Press X to reload,’ ‘Press Y to switch weapons.’ Complex actions collapse into a single button press. Accurate natural motion input enables mechanics that games have never been able to achieve until now.

Sixense is just starting to scratch the surface of this. A developer demo that will ship with the SixenseVR SDK includes a wieldable Desert Eagle that looks like a blast to shoot. Rather than pressing a button to equip the gun, you actually pick it up. Rather than pressing a button to reload, you have to eject the old clip and physically insert another. I hope to see this concept taken even further. Imagine loading individual bullets into your clip, or even disassembling your weapon to clean it; from that sentence alone, never-before-possible gameplay mechanics are popping into my head.

A New Bar for Game Interaction

Even the simple stuff (flipping switches, stacking blocks, breaking bottles) can be significantly more fun and interactive when you are really doing it rather than pressing an abstracted button. This last video shows some extremely simple interactions, but damn if they don’t look fun to do. The first person to make a demolition room with awesome physics, where the player gets a sledgehammer, is guaranteed to get my money.

Sixense ran a successful Kickstarter for the STEM system back in September 2013. The company blew past its $250,000 goal, raising more than $600,000 for the next-gen VR controller. The unit features significant improvements over the earlier Razer Hydra, including wireless operation, more tracking points, and better performance.


Sixense says that all of the above developer examples will be included in the forthcoming SixenseVR SDK which is used to integrate the STEM and other motion controllers into VR games. It will also include custom prefabs that can be dropped into any game, according to the company. More examples are also in the works, including a ping-pong demo that Sixense says is particularly compelling.

Hydra users paying close attention may also have noticed one simple but genius trick that Sixense is building into the STEM SDK: the base of the unit can now be made visible in the game world. This makes it dead simple to set the controllers back down on the base when you’re done playing, rather than fumbling to find their resting position before removing your headphones and VR headset. It should make the start and end of your VR sessions much smoother.

Here are some of the features that Sixense has planned for the SixenseVR SDK, as noted in the latest update on the STEM Kickstarter:

- Leg, arm, torso, and physical prop tracking. Allows you to place STEM Controllers or STEM Packs wherever you like to experiment with other types of interaction using your entire body, and even other types of controllers.

- User avatar adjustment control panel. When allowed by the game/application designer, users can override the gender and proportions of their avatar to match their own body, for a much more natural sense of body awareness.

- Two-handed interactions with various types of virtual objects.

- Two-way collisions with the environment, allowing other objects to affect the avatar’s body.

- Multi-user support. Simple interface for efficiently synchronizing a user’s body pose over a network, enabling nuanced face-to-face interaction with subtle body language cues across distant physical locations.

- Additional game engines: Unreal Engine 4, Source SDK and CryEngine.