The Trinity Magnum is the latest VR motion controller to enter the arena. Following devices like STEM and PrioVR, the Magnum aims to bring an optically tracked solution to the market, and one that’s significantly less expensive. Recently I got to try the Magnum for myself and sat down with TrinityVR’s Chief of Product, Julian Volyn, to learn more.

The Trinity Magnum approaches the VR motion controller in much the same way that smartphone adapters approach the VR headset: rather than shelling out for lots of new hardware, why not leverage hardware people already have?

The foundation of TrinityVR’s motion input is an optical tracking solution built on OpenCV, an open-source library for computer vision processing. The company says that many consumers already have OpenCV-compatible cameras built into their laptops, if not higher-performance cameras like the PlayStation Eye or Microsoft Kinect. As Julian Volyn, TrinityVR’s Chief of Product, points out, the great thing about this approach is that the performance of the Trinity Magnum increases as webcams and OpenCV improve.
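To give a rough idea of what optical tracking of a glowing orb involves, here is a minimal sketch that finds the centre of the bright pixels in a single grayscale frame. This is an illustration only, not TrinityVR’s code: the function name, the threshold value, and the tiny synthetic frame are all assumptions, and a real pipeline would presumably rely on OpenCV routines rather than plain Python lists.

```python
from typing import List, Optional, Tuple


def bright_blob_centroid(
    frame: List[List[int]], threshold: int = 200
) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of pixels at or above `threshold`.

    Returns None when no pixel qualifies, e.g. when the orb is
    hidden from the camera. The threshold of 200 is an assumed value.
    """
    count = 0
    sum_x = 0
    sum_y = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                count += 1
                sum_x += x
                sum_y += y
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


# Hypothetical 5x5 grayscale frame containing a bright 2x2 "orb".
frame = [
    [10, 10, 10, 10, 10],
    [10, 10, 250, 250, 10],
    [10, 10, 250, 250, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
]

print(bright_blob_centroid(frame))
```

A fixed brightness threshold like this is exactly the kind of value that has to be tuned to the lighting in a room, which is why automatic calibration matters for this approach.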

TrinityVR launched a Kickstarter last week; the company aims to raise $60,000 to get the Trinity Magnum developer kit into developers’ hands.

Though Volyn had to spend some time dialing in values to compensate for the lighting of the room we were in, once the system was calibrated the tracking performance was impressive. Above you can see a video from the company’s Kickstarter showing the robust tracking, even when passing through high-contrast regions. So long as TrinityVR can nail automatic scene calibration, this type of optical tracking could work very well as a VR input solution.

TrinityVR even expects that they can use the Oculus Rift DK2’s IR camera for tracking; they say a contact at Oculus has confirmed that it will be possible. It’s great to know that Oculus is leaving the DK2 IR camera open and accessible to developers—hopefully something we’ll see even into the release of the consumer Oculus Rift (CV1).

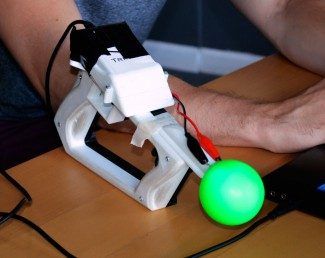

In my time with the Trinity Magnum prototype, the controller was tracked with the PlayStation Eye camera. TrinityVR points out that this is a surprisingly inexpensive camera with solid performance. You can pick it up for $10 and enjoy 60 or 120 frames per second (depending upon resolution) with up to a 75-degree field of view. That’s no surprise, as the PlayStation Eye is also compatible with the PlayStation Move, which uses the same tracking technique as the Trinity Magnum. The difference, of course, is that the Move can’t be used on PC without a hack.
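One common way a single camera can estimate how far away a tracking orb is, assuming the orb’s real size is known, is the pinhole camera model: the smaller the orb appears in the image, the farther away it must be. The sketch below works through that arithmetic. It is not confirmed to be how the Magnum computes depth, and the 640-pixel frame width, the treatment of 75 degrees as the horizontal field of view, and the 4.5 cm orb diameter are all assumptions chosen for illustration.

```python
import math


def focal_length_px(image_width_px: int, horizontal_fov_deg: float) -> float:
    """Focal length in pixels for an ideal pinhole camera."""
    half_fov = math.radians(horizontal_fov_deg) / 2
    return (image_width_px / 2) / math.tan(half_fov)


def orb_distance_m(
    orb_diameter_m: float,
    orb_diameter_px: float,
    image_width_px: int,
    horizontal_fov_deg: float,
) -> float:
    """Estimate camera-to-orb distance from the orb's apparent size."""
    f = focal_length_px(image_width_px, horizontal_fov_deg)
    return f * orb_diameter_m / orb_diameter_px


# Assumed values: 640 px wide frame, 75 degree horizontal FOV,
# a hypothetical 4.5 cm orb that appears 20 px across in the image.
distance = orb_distance_m(0.045, 20.0, 640, 75.0)
print(f"Estimated distance: {distance:.2f} m")
```

Because distance is inversely proportional to the orb’s size in pixels, a higher-resolution camera resolves that size more finely, which fits TrinityVR’s point that better cameras should improve positional tracking.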

While optical tracking takes care of the position of the Magnum, an IMU tracks its orientation. The company says that, with the PlayStation Eye, the Magnum is capable of rotational accuracy under 2 degrees at 95 Hz and positional accuracy under 3 mm at 75 Hz, all with latency under 30 ms. With a better camera, the positional performance would improve, says TrinityVR.
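To illustrate how a position from the camera and an orientation from the IMU can be combined into something like a virtual laser sight, here is a minimal sketch that rotates a forward vector by an orientation quaternion and projects a point along the resulting ray. The coordinate convention (negative Z as forward), the function names, and the sample values are assumptions for illustration, not details from TrinityVR.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q_xyz, v)
    tx = 2 * (y * vz - z * vy)
    ty = 2 * (z * vx - x * vz)
    tz = 2 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_xyz, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def aim_point(position: Vec3, orientation: Quat, distance: float) -> Vec3:
    """Point `distance` metres along the controller's forward ray."""
    forward = rotate(orientation, (0.0, 0.0, -1.0))  # assumed: -Z is forward
    return (
        position[0] + distance * forward[0],
        position[1] + distance * forward[1],
        position[2] + distance * forward[2],
    )


# Hypothetical sample: controller 1.2 m up, yawed 90 degrees about the Y axis.
half = math.radians(90.0) / 2
yaw_90 = (math.cos(half), 0.0, math.sin(half), 0.0)
print(aim_point((0.0, 1.2, 0.0), yaw_90, 10.0))
```

Since the two sensors report at different rates, a real system would also need to pair each position sample with an orientation sample from roughly the same moment; this sketch simply assumes both are current.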

In my time with the Magnum prototype, I was impressed with the accuracy of the system. I was dropped into their Boot Camp demo where I could run around and shoot exploding barrels. The fusion of the positional and rotational data allowed me to aim at distant barrels, using the virtual laser sight, with ease. From time to time I did find what seemed to be an odd “sweet spot” in the IMU where the virtual gun would fight back when I wanted to rotate it. This is presumably just a quirk of the IMU and perhaps a result of using a three-month-old prototype that’s been to numerous trade shows.

In the Boot Camp demo, turning was configured so that when you tilt the gun, you turn left or right accordingly. This felt a bit unintuitive to me, but it was necessary as the prototype only had one joystick which was used for walking—and of course developers are free to implement their own control schemes.

I also tried the Z0NE demo (formerly Rift Wars), which will have support for the Magnum. It allowed me to control a Gatling gun on the front of the ship and blast cubes in front of me. It was definitely more intuitive than using my head for aiming, though currently the implementation doesn’t utilize the Magnum’s positional tracking capability.

The ergonomics of the prototype definitely need some work. To that end, TrinityVR has partnered with Cinder Solutions, who are running prototype designs through focus groups and user testing. The final design hasn’t been decided upon yet, but based on the mockup (pictured above), they are heading in the right direction.

My biggest concern with an optical tracking solution is of course occlusion (when the tracking orb is hidden from the camera). Right now the Trinity Magnum is really only suitable for a sitting experience. If you were to stand up and use your body for rotation instead of a joystick, the camera would lose sight of the tracking orb—though the same issue remains for positional tracking on the Oculus Rift DK2 and Sony’s Morpheus. Volyn did say it’s possible to use multiple cameras (or an overhead camera) to solve this problem. TrinityVR will reveal more details soon regarding overhead and multicam setups in an update on their Kickstarter campaign.

Overall, I came away impressed with the Magnum’s performance, especially considering its cost. It will be very interesting to see how performance continues to improve as OpenCV advances and as even better consumer cameras become available. So long as calibration is handled with little user intervention, the Trinity Magnum could be the value option for VR input. Integration with the Oculus Rift DK2’s IR camera would certainly be a big plus for those who already own the headset.

Full Disclosure: TrinityVR is running Magnum ads on Road to VR.