While most of us are used to resolution figures that describe pixel count (e.g., a 1920×1080 monitor), pixel density stated as pixels per degree is a much more useful figure, especially when dealing with AR and VR headsets. Achieving ‘retinal resolution’ is the ultimate goal for headsets: the pixel density beyond which even people with perfect vision can’t discern any additional detail. This article explores those concepts and takes a look at how far today’s headsets are from retinal resolution.

Guest Article by Yuval Boger

Yuval is CEO of Sensics and co-founder of OSVR. Yuval and his team designed the OSVR software platform and built key parts of the OSVR offering. He frequently shares his views and knowledge on his blog.

If the human eye were a digital camera, its ‘data sheet’ would say that it has a sensor capable of detecting 60 pixels/degree at the fovea (the part of the retina where visual acuity is highest). For visual quality, any display above 60 pixels/degree is essentially wasting resolution because the eye can’t pick up any more detail. This is called retinal resolution, or eye-limiting resolution.

This means that if there were an image with 3,600 pixels (60 x 60) and that image fell on a 1° x 1° area of the fovea, a person would not be able to tell it apart from an image with 8,100 pixels (90 x 90) that fell on the same 1° x 1° area.

Note: the 60 pixels per degree figure is sometimes expressed as “1 arc-minute per pixel”. Not surprisingly, an arc-minute is an angular measurement defined as 1/60th of a degree. This kind of calculation is the basis for what Apple refers to as a “retina display”, a screen that, when held at the right distance, would generate this kind of pixel density on the retina.
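As a rough sketch of that calculation, the snippet below estimates the pixels per degree of a flat screen viewed head-on from a given distance, and the distance at which it reaches 60 pixels/degree. The function names and the 326 pixels-per-inch panel are illustrative assumptions rather than figures from this article, and the geometry only holds near the center of the screen.

```python
import math

RETINAL_PPD = 60.0  # pixels/degree figure used throughout this article


def screen_pixels_per_degree(ppi: float, distance_in: float) -> float:
    """Approximate pixels per degree at the center of a flat screen.

    ppi: panel density in pixels per inch (assumed value, see below).
    distance_in: viewing distance in inches.
    """
    # Width of screen covered by a 1-degree cone centered on the line of sight.
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


def retinal_distance_in(ppi: float, target_ppd: float = RETINAL_PPD) -> float:
    """Viewing distance (inches) at which the screen reaches target_ppd."""
    return target_ppd / (2 * ppi * math.tan(math.radians(0.5)))


# Hypothetical 326 ppi panel held 12 inches from the eye.
ppd_at_12in = screen_pixels_per_degree(326, 12)
crossover = retinal_distance_in(326)
print(f"{ppd_at_12in:.1f} pixels/degree at 12 in")
print(f"60 pixels/degree reached at about {crossover:.1f} in")
```

With these assumed numbers the panel comes out at roughly 68 pixels/degree at 12 inches and crosses the 60 pixels/degree mark at about 10.5 inches; hold it closer than that and individual pixels could, in principle, be resolved.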

If you have a VR headset, you can calculate the pixel density—how many pixels per degree it presents to the eye—by dividing the number of pixels in a horizontal display line by the horizontal field of view provided by the lens. For instance, the Oculus Rift DK1 dev kit (yes, I know that was quite a while ago) used a single 1280 x 800 display (so 640 x 800 pixels per eye) and with a monocular horizontal field of view of about 90 degrees, it had a pixel density of just over 7 pixels/degree (640 ÷ 90). You’ll note that this is well below the retinal resolution of 60 pixels per degree.
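The same division can be written as a tiny helper. This is only a sketch: the function name is made up for illustration, and it returns an average across the field of view, ignoring any variation the lens introduces between the center and the edges.

```python
def pixels_per_degree(horizontal_pixels_per_eye: float,
                      horizontal_fov_deg: float) -> float:
    """Average linear pixel density presented to one eye."""
    return horizontal_pixels_per_eye / horizontal_fov_deg


# Oculus Rift DK1 figures from the paragraph above:
# 640 horizontal pixels per eye over roughly 90 degrees.
dk1 = pixels_per_degree(640, 90)
print(f"DK1: {dk1:.2f} pixels/degree")
```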

Not to pile on the DK1 (it had many good things, though resolution was not one of them), but 7 pixels/degree is only the linear pixel density. When you think about it in terms of pixel density per unit of surface area, it’s not just about 8.5 times worse than the human eye (60 ÷ 7 ≈ 8.5) but actually a lot worse: 8.5 × 8.5, which is over 70 times.
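To make the linear-versus-area distinction concrete, here is a minimal sketch that squares the linear shortfall. The helper name is hypothetical, and it assumes the same density applies horizontally and vertically.

```python
RETINAL_PPD = 60.0  # pixels/degree at the fovea, as used in this article


def shortfall(ppd: float) -> tuple[float, float]:
    """Return (linear, areal) shortfall relative to retinal resolution."""
    linear = RETINAL_PPD / ppd
    areal = linear ** 2  # pixels per square degree scale with the square
    return linear, areal


linear, areal = shortfall(7.0)  # DK1, rounded to 7 pixels/degree
print(f"linear: {linear:.1f}x, per unit area: {areal:.0f}x")
```

Without rounding 60 ÷ 7 down to 8.5, the areal figure comes out slightly higher than 8.5 × 8.5, but either way it is over 70.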

The following table compares pixel densities for some popular consumer and professional HMDs:

| VR Headset | Horizontal Pixels Per Eye | Approximate Horizontal Field of View (degrees per eye) | Approximate Pixel Density (pixels/degree) |
| --- | --- | --- | --- |
| Oculus DK1 | 640 | 90 | 7 |
| OSVR HDK1 | 960 | 90 | 11 |
| HTC Vive | 1080 | 100 | 11 |
| Sensics dSight | 1920 | 95 | 20 |
| Sensics zSight | 1280 | 48 | 27 |
| Sensics zSight 1920 | 1920 | 60 | 32 |
| Human fovea | – | – | 60 |

Higher pixel density allows you to see finer details—read text; see the grain of the leather on a car’s dashboard; spot a target at a greater distance—and in general contributes to an increasingly realistic image.

Historically, one of the things that separated professional-grade VR headsets from consumer headsets was a higher pixel density. Let’s simulate this using the following four images. Let’s assume that the first image (taken from Epic’s Showdown demo) is shown at the full 60 pixels/degree density (which it could be, depending upon the resolution of your monitor and the distance you sit from it). We can then re-sample it at half the pixel density (simulating 30 pixels/degree), then half again (15 pixels/degree), and half again (7.5 pixels/degree). Notice the stark differences as we go to lower and lower pixel densities.
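For readers who want to try the halving on their own images, the sketch below shows one way it could be done. It is an assumption about the method, not a description of how the images here were produced: each 2 x 2 block of a grayscale image (a plain list of rows) is averaged into one pixel, then blown back up so the result can be viewed at the original size.

```python
def halve_density(image):
    """Average each 2x2 block of a grayscale image into a single pixel."""
    height = len(image) // 2 * 2   # drop a trailing odd row, if any
    width = len(image[0]) // 2 * 2  # drop a trailing odd column, if any
    out = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            total = (image[y][x] + image[y][x + 1]
                     + image[y + 1][x] + image[y + 1][x + 1])
            row.append(total / 4)
        out.append(row)
    return out


def upscale_nearest(image, factor):
    """Repeat every pixel `factor` times in both directions."""
    out = []
    for row in image:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


# An 8x8 checkerboard of single-pixel squares: the finest detail possible.
checker = [[255 if (x + y) % 2 == 0 else 0 for x in range(8)]
           for y in range(8)]
half = halve_density(checker)          # 4x4, simulating half the density
preview = upscale_nearest(half, 2)     # back to 8x8 for side-by-side viewing
print(half[0])
```

After a single halving the checkerboard collapses to a uniform mid-gray, which is the same loss of fine detail visible in the lower-density images.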

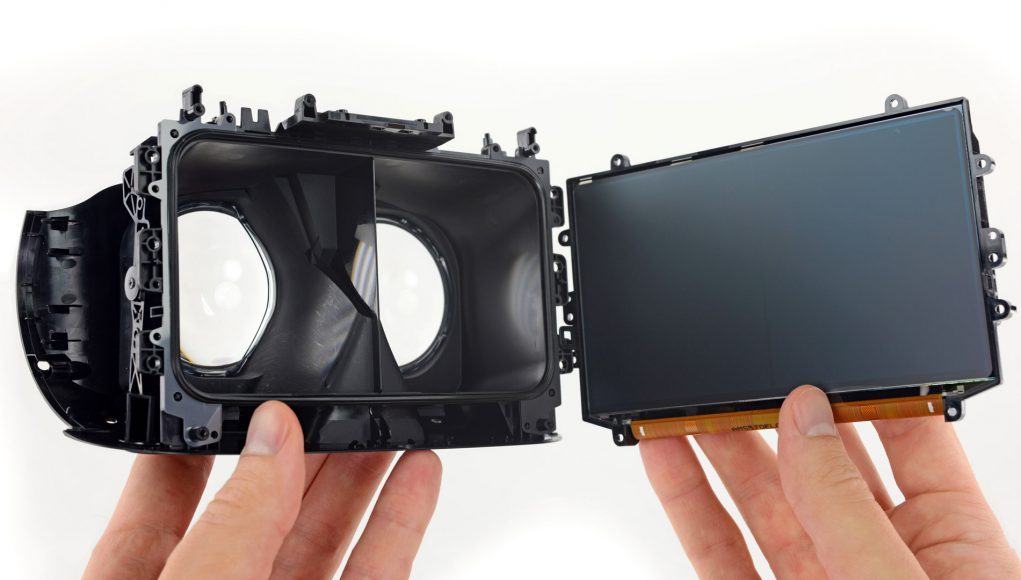

Higher pixel density for the visual system is not necessarily the same as higher pixel density on the screen, because pixels on the screen are magnified through the optics. The same screen could be magnified differently by two different optical systems, resulting in different pixel densities presented to the eye. It is true, though, that given the same optical system, a higher pixel density on the screen does translate to a higher pixel density presented to the eye.
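The table above happens to contain two rows with the same horizontal pixel count (1,920) but different fields of view, which makes for a handy illustration. The sketch below treats the field of view as a stand-in for how strongly the optics magnify the screen; the function names are illustrative, and nothing here implies the two products share a panel.

```python
def pixels_per_degree(horizontal_pixels: float, fov_deg: float) -> float:
    """Average pixel density presented to the eye."""
    return horizontal_pixels / fov_deg


def arcmin_per_pixel(horizontal_pixels: float, fov_deg: float) -> float:
    """Angular size of one pixel, in arc-minutes (60 per degree)."""
    return fov_deg * 60 / horizontal_pixels


PANEL_PIXELS = 1920  # horizontal pixels per eye, from the table
for fov in (95, 60):  # wider optics vs. narrower optics
    ppd = pixels_per_degree(PANEL_PIXELS, fov)
    size = arcmin_per_pixel(PANEL_PIXELS, fov)
    print(f"{fov} degrees: {ppd:.1f} pixels/degree, "
          f"{size:.2f} arc-minutes per pixel")
```

Spreading the same pixels over a wider field of view makes each pixel subtend a larger angle, so the density seen by the eye drops even though the screen itself has not changed.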

As screens get better and better, we will get ever closer to eye-limiting resolution in the headset and thus closer to photo-realistic experiences.
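To get a feel for how far there is to go, the pixels-per-degree formula can be turned around to ask how many horizontal pixels per eye a headset would need to hit 60 pixels/degree across its whole field of view. This is a back-of-the-envelope sketch using the same simple averaging as above, with a hypothetical helper name; it says nothing about what is practical to build.

```python
import math

RETINAL_PPD = 60  # pixels/degree, the retinal resolution figure used here


def pixels_needed(fov_deg: float, target_ppd: float = RETINAL_PPD) -> int:
    """Horizontal pixels per eye required to reach target_ppd over fov_deg."""
    return math.ceil(fov_deg * target_ppd)


# HTC Vive row from the table: 1080 pixels per eye over about 100 degrees.
vive_target = pixels_needed(100)
vive_factor = vive_target / 1080
print(f"{vive_target} pixels per eye, about {vive_factor:.1f}x today's 1080")
```

Under these assumptions, a 100-degree headset would need about 6,000 horizontal pixels per eye, between five and six times what the Vive offers.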