7invensun, one of the latest companies accepted into HTC’s Vive X accelerator program, is launching an eye-tracking add-on made specifically for the Vive headset. The device, which went on sale last year, uses a series of IR LEDs and a near-eye camera to track the user’s eyes in real time, enabling a range of potential benefits for VR.

Update (5/30/18): 7invensun has confirmed that the latest iteration of its aGlass eye-tracking add-on, the aGlass DK2, is compatible with the Vive Pro as well as the original Vive. All that’s required is an adapter that lets the device’s original plug connect to the Vive Pro’s USB-C port.

The company says it is also now offering ‘Advanced’, ‘Analytical’ and ‘Ultimate’ Editions of the eye-tracking add-on, which offer greater capabilities than the consumer version, such as pupil diameter, pupil location, eyelid distance, iris recognition and customizable calibration.

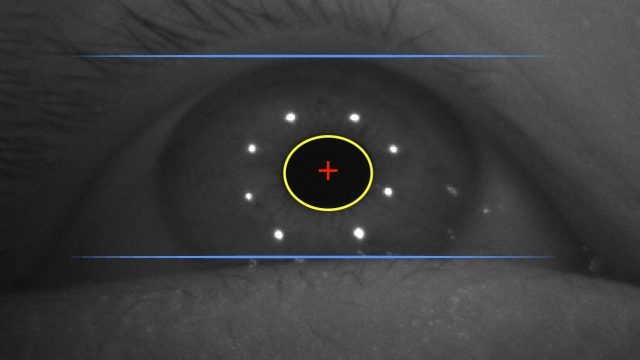

Original Article (4/27/17): Meeting with 7invensun at HTC’s Vive X accelerator space in San Francisco today, I got to see the aGlass eye-tracking development kit in action. The device comes as two compact assemblies that slot easily around the Vive’s lenses. Each uses a ring of IR LEDs to illuminate the eye while a small camera records its movements and sends the data back to the computer for processing. The assemblies are smartly powered from the Vive’s extra USB port, which is hidden under the cable retainer at the top of the headset.

Foveated Rendering and More

Eye-tracking can be used for a number of useful purposes in VR. Perhaps one of the biggest is foveated rendering, which reduces the resolution in your peripheral vision, where the image doesn’t need to be as sharp, while leaving the center of your vision at full quality. This can significantly reduce the processing power required to render a high-quality, high-framerate VR scene. Within the VR industry, foveated rendering via eye-tracking is largely considered a necessary technology for one day achieving retinal resolution in VR headsets, because it reduces the processing power needed to render to ultra-high pixel density displays.
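To make the idea concrete, here is a minimal Python sketch of how a renderer might assign a coarser shading rate to screen tiles farther from the gaze point, and estimate how much shading work that saves. It is an illustration under stated assumptions, not 7invensun’s proprietary technique or any NVIDIA API: the 110° field of view, the 10° and 25° ring radii, the 16-pixel tile size and the linear pixel-to-degree mapping (which ignores lens distortion) are all hypothetical.

```python
import math

# Hypothetical parameters for illustration only; not aGlass or NVIDIA values.
FOV_DEG = 110.0     # assumed horizontal field of view per eye
INNER_DEG = 10.0    # assumed radius of the full-resolution foveal region
MIDDLE_DEG = 25.0   # assumed outer radius of the half-rate ring


def eccentricity_deg(px, py, gaze_x, gaze_y, width):
    """Approximate angular distance (degrees) between a pixel and the gaze point.

    Uses a linear pixels-to-degrees mapping, which ignores lens distortion.
    """
    deg_per_px = FOV_DEG / width
    return math.hypot(px - gaze_x, py - gaze_y) * deg_per_px


def shading_rate(ecc_deg):
    """Return how many pixels along each axis share one shading sample."""
    if ecc_deg <= INNER_DEG:
        return 1  # full quality where the user is looking
    if ecc_deg <= MIDDLE_DEG:
        return 2  # a 2x2 pixel block shares one shading sample
    return 4      # a 4x4 pixel block shares one sample in the far periphery


def shading_cost(width, height, gaze_x, gaze_y, tile=16):
    """Fraction of full-resolution shading work needed for one foveated frame.

    Works per tile: each tile takes the rate of its center pixel.
    """
    full = 0
    foveated = 0.0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            cx, cy = tx + tile / 2, ty + tile / 2
            rate = shading_rate(eccentricity_deg(cx, cy, gaze_x, gaze_y, width))
            pixels = tile * tile
            full += pixels
            foveated += pixels / (rate * rate)
    return foveated / full
```

For a 1080×1200 per-eye image (the original Vive’s per-eye resolution) with the gaze at the center, `shading_cost(1080, 1200, 540, 600)` returns a fraction well below 1, because most tiles fall in the coarsest ring. Deciding the rate per tile rather than per pixel keeps the sketch cheap and roughly mirrors how coarse shading is applied on GPUs, but the exact savings depend entirely on the assumed radii.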

Foveated rendering is already up and running with the aGlass dev kit, and is presently said to work with any application without modification, so long as the computer is equipped with an NVIDIA GPU. The company aims to extend the capability to AMD cards as well. My current understanding is that other functions that use aGlass’s eye-tracking data are GPU-independent, but foveated rendering is a special case because of the rendering technique currently in use.

Beyond foveated rendering, eye-tracking can be used to animate the eyes of your VR avatar more realistically, to simulate depth-of-field effects, to provide conscious and unconscious input inside VR apps, and more.

Corrective Lenses

Beyond eye-tracking, the aGlass add-on also opens the door to corrective lenses for those who normally wear glasses. Today’s high-end VR headsets lack variable focus control, so those with glasses are either stuck trying to fit them inside the headset or not playing with them at all (and suffering a blurry virtual image). The aGlass add-on is designed for easy insertion of custom-made lenses that rest over the Vive’s original lenses, and the development kit comes with three focus powers. And if you don’t need corrective lenses, the device works entirely fine without them.

Hands-on With the aGlass Dev Kit

I got to try the aGlass dev kit myself at the Vive X accelerator office in San Francisco today.

The calibration process took several tries and quite a bit of fidgeting with the headset to get right. In my experience this is not entirely unique to aGlass, but speaks to the underlying challenges of designing a robust eye-tracking system that can handle a wide range of face and eye structures, not to mention those with eye problems (just an FYI, I’m fortunate to have 20/20 vision).

Once we did get the system calibrated (which involves looking at a series of dots on the screen and takes only a few seconds if it works on the first try), foveated rendering was demonstrated using NVIDIA’s VR Funhouse. Foveated rendering only works well if the eye-tracking is fast and accurate enough that the user can hardly notice it happening.
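7invensun didn’t describe how aGlass turns those dot fixations into a gaze mapping. A common textbook approach is to record the pupil position while the user looks at each known dot, then fit a regression from pupil coordinates to screen coordinates. The pure-Python sketch below assumes the simplest such model, an affine least-squares fit per screen axis; the function names are hypothetical, and production trackers often use higher-order polynomials or 3D eye models instead.

```python
def solve3(m, v):
    """Solve the 3x3 linear system m @ x = v by Gaussian elimination with partial pivoting."""
    n = 3
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (a[r][n] - s) / a[r][r]
    return x


def fit_affine(pupil_pts, target_pts):
    """Fit screen = w0*px + w1*py + w2 for each screen axis by least squares.

    pupil_pts:  measured pupil positions (px, py), one per calibration dot
    target_pts: known on-screen dot positions (sx, sy), in the same order
    Returns [weights_for_x, weights_for_y].
    """
    rows = [(px, py, 1.0) for px, py in pupil_pts]
    # Normal equations (X^T X) w = X^T y; X^T X is shared by both axes.
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in range(2):
        xty = [sum(r[i] * t[axis] for r, t in zip(rows, target_pts)) for i in range(3)]
        coeffs.append(solve3(xtx, xty))
    return coeffs


def apply_affine(coeffs, px, py):
    """Map a new pupil position to an estimated on-screen gaze point."""
    return tuple(w[0] * px + w[1] * py + w[2] for w in coeffs)
```

An affine model has three unknowns per axis, so it needs at least three non-collinear dots. With fewer, the normal equations become singular, and extra dots help average out noisy fixations.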

I was told the aGlass has a 5ms latency. That was fast enough that, under the right rendering settings, I could barely tell that foveated rendering was turned on, which is great. The particular method they were using to achieve foveated rendering was described as “proprietary,” and it did work better than a number of other attempts I’ve seen, which often introduce a blurry feeling in the periphery that gives the effect away easily and doesn’t feel quite right.
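For scale, the quoted 5ms latency can be compared with the frame interval at the 90 FPS the Vive targets:

```latex
t_{\text{frame}} = \frac{1000\ \text{ms}}{90} \approx 11.1\ \text{ms},
\qquad
\frac{5\ \text{ms}}{11.1\ \text{ms}} \approx 0.45
```

In other words, the tracker’s quoted latency is a little under half of one frame interval.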

The laptop powering this demo wasn’t powerful enough to be formally called ‘VR ready’, and with foveated rendering disabled I could easily see lots of jitter as the computer failed to keep up with the rendering workload. Once foveated rendering was enabled, the computer consistently hit the necessary 90 FPS.

Improvements to Come

The installation seems dead simple, though the current dev kit adds a little bulk around the Vive’s lenses, which slightly restricts the low end of the IPD setting. The average IPD is often quoted as roughly 64mm, but with the aGlass installed I could only bring the Vive’s IPD down to 65mm. The company says it aims to fix this for the consumer version of the device.

Another issue for me was the need to dial the Vive’s lens relief out a few notches to get the calibration to work correctly. Doing so reduces the headset’s field of view, which is of course not ideal. The problem may be due to the angle of the eye-tracking camera, which is mounted on the aGlass bezel below the eye. The company says it hopes to make calibration more robust by enlarging the system’s ‘sweet spot’ so that it can better handle varying eye and facial structures.

The aGlass dev kit also has two soft rubber flaps that I could feel pressing gently against the inner corners of my eyes. These may be needed to keep the IR light from interfering with the Vive’s head sensor, which sits on the headset right between your eyes. It didn’t hurt, but it was slightly uncomfortable, since you don’t usually have something touching that part of your eyes. The company says the consumer version won’t require the flaps.

aGlass Dev Kit Release Date

7invensun says the aGlass eye-tracking dev kit add-on for Vive will go on sale in China this month, priced around $220. In the US, the company expects to offer the dev kit for sale in Q3. There’s no word yet on when a consumer version of the device will become available.